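The product demo is already working.

The next step is to use an online speech API to convert text into speech, and then integrate it into the demo. So the API not only has to work on its own, it also has to be wired into the existing product.

I'll start with the best option I have found so far: Baidu's speech synthesis (TTS) API.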

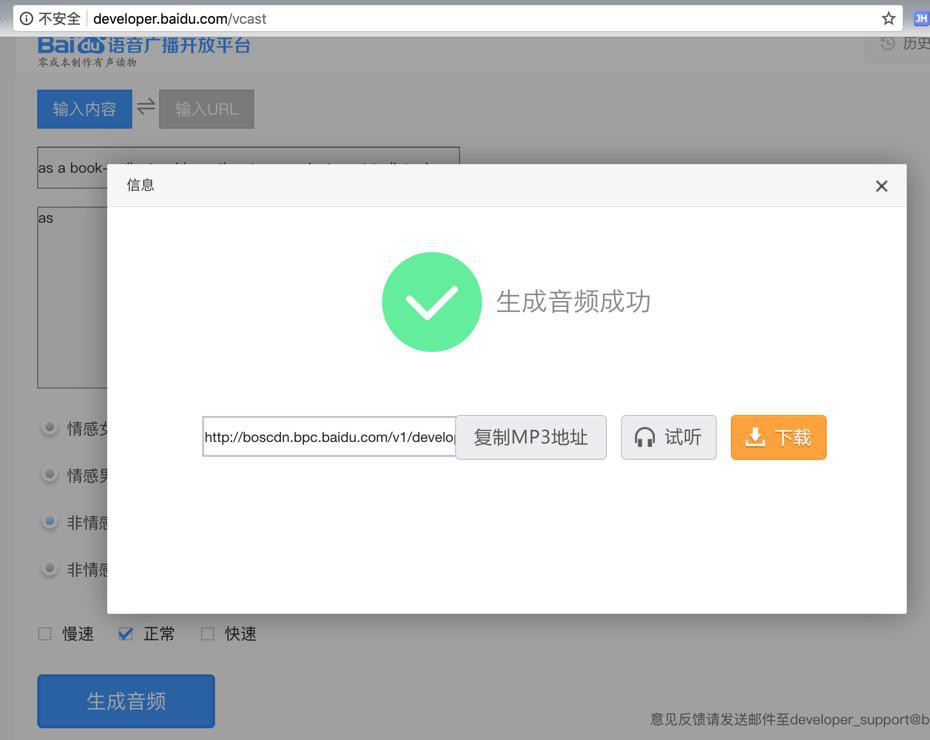

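I just noticed that it can also generate a temporary audio file and output it as a URL.

One drawback: it seems that both a title and a body must be provided before anything is generated.

First, register a Baidu developer account, then read the official documentation in detail: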

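“Browser cross-origin requests

The synthesis API currently supports browser cross-origin requests.

Cross-origin demo: https://github.com/Baidu-AIP/SPEECH-TTS-CORS

The token API does not support browser cross-origin requests, so you need to obtain the token on the server side or enter an updated one manually every 30 days.”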

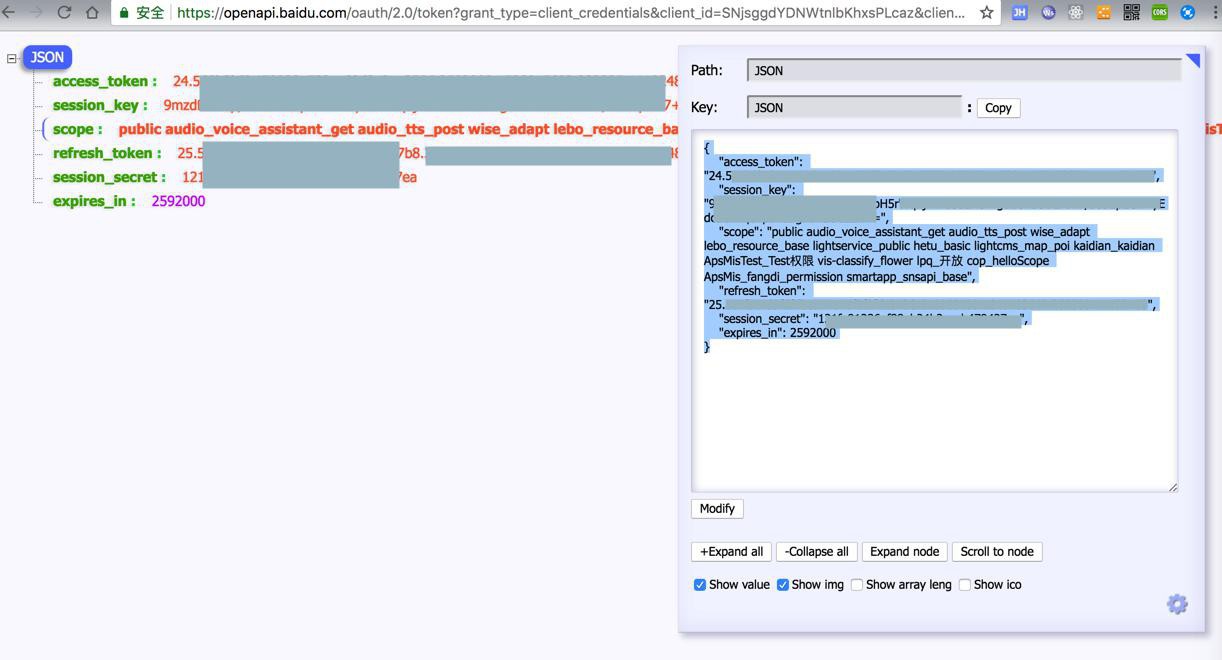

Next, get a token by opening this URL in a browser:

https://openapi.baidu.com/oauth/2.0/token?grant_type=client_credentials&client_id=SNjsggdYDNWtnlbKhxsPLcaz&client_secret=47d7c02dxxxxxxxxxxxxxxe7ba

The response contains the token:

{

"access_token": "24.569b3b5b470938a522ce60d2e2ea2506.2592000.1528015602.282335-11192483",

"session_key": "9mzdDoR4p/oexxx0Yp9VoSgFCFOSGEIA==",

"scope": "public audio_voice_assistant_get audio_tts_post wise_adapt lebo_resource_base lightservice_public hetu_basic lightcms_map_poi kaidian_kaidian ApsMisTest_Test权限 vis-classify_flower lpq_开放 cop_helloScope ApsMis_fangdi_permission smartapp_snsapi_base",

"refresh_token": "25.5axxxx5-xxx3",

"session_secret": "12xxxa",

"expires_in": 2592000

}

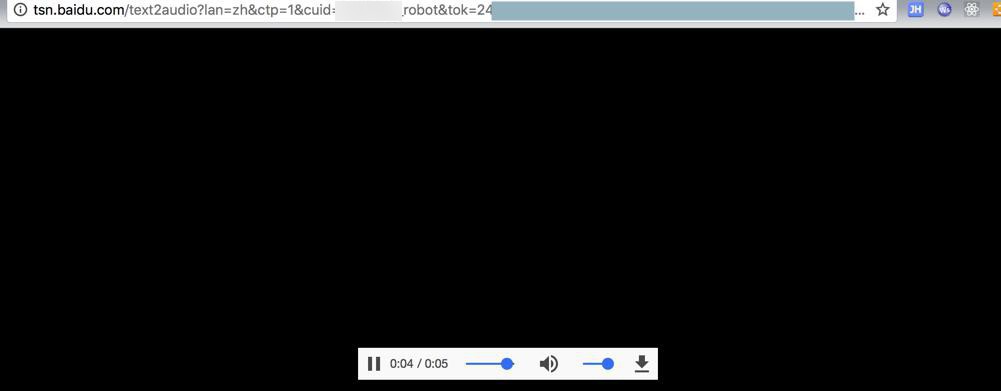
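The `expires_in` field in the response above is a lifetime in seconds. As a small sketch (the helper name `describe_token_lifetime` and the issue time are my own assumptions, not part of any Baidu SDK), it can be converted into days and an absolute expiry time, which makes the "30 days" from the documentation visible:

```python
from datetime import datetime, timedelta, timezone


def describe_token_lifetime(token_resp, issued_at):
    """Return (lifetime in whole days, absolute expiry time) for a token response dict."""
    lifetime = timedelta(seconds=token_resp["expires_in"])
    return lifetime.days, issued_at + lifetime


# expires_in is taken from the response above; the issue time is hypothetical
issued = datetime(2018, 5, 4, tzinfo=timezone.utc)
days, expires_at = describe_token_lifetime({"expires_in": 2592000}, issued)
print(days, expires_at.isoformat())
```

A server could store the computed expiry next to the token and refresh shortly before it, instead of waiting for a failed call.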

Then call this URL:

http://tsn.baidu.com/text2audio?lan=zh&ctp=1&cuid=xxx_robot&tok=24.56xxx3&vol=9&per=0&spd=5&pit=5&tex=as+a+book-collector%2c+i+have+the+story+you+just+want+to+listen!

This fetches the synthesized MP3.

Clearly, the response is the MP3 content itself, not the temporary MP3 URL I was hoping for.
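The request URL above can also be assembled from its parameters rather than typed by hand. The following is a minimal sketch using only the standard library; the function name `build_text2audio_url` and the default values are assumptions for illustration, while the parameter names (`lan`, `ctp`, `cuid`, `tok`, `vol`, `per`, `spd`, `pit`, `tex`) are the ones visible in the URL above:

```python
from urllib.parse import urlencode, parse_qs, urlsplit

# Endpoint as it appears in the URL above
TEXT2AUDIO_ENDPOINT = "http://tsn.baidu.com/text2audio"


def build_text2audio_url(text, token, cuid, vol=9, per=0, spd=5, pit=5):
    """Assemble the text2audio GET URL; urlencode percent-encodes the text."""
    params = {
        "lan": "zh", "ctp": 1, "cuid": cuid, "tok": token,
        "vol": vol, "per": per, "spd": spd, "pit": pit, "tex": text,
    }
    return TEXT2AUDIO_ENDPOINT + "?" + urlencode(params)


# Placeholder token and cuid, not real credentials
url = build_text2audio_url("as a book-collector, i have the story", "24.xxx", "xxx_robot")
print(url)
```

Letting `urlencode` do the escaping avoids hand-encoding spaces and punctuation in `tex`.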

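The earlier approach, by contrast, could return a temporary MP3 URL.

I searched for:

boscdn bpc baidu.com

It looks like one of Baidu's CDN servers, and there is also a JS interface that uploads content and generates a temporary URL.

But that seems to be for Baidu's own internal use?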

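What I need next:

1. Ideally make the Baidu token permanent, or at least valid for a year, instead of the current one month.

2. Ideally turn the generated MP3 file into a URL that can be returned to the user.

It looks like I need to wrap Baidu's API inside my own Flask REST API and expose one unified interface that returns an MP3 URL with a limited lifetime. Internally, the MP3 could be saved under /tmp, or stored in Redis with an expire time.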
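To make the "/tmp with an expiry" idea concrete, here is a minimal standard-library sketch. It is only one possible approach: the function names `save_temp_audio` and `purge_expired` are hypothetical, and expiry is decided from the file's modification time rather than by a task queue or Redis.

```python
import os
import tempfile
import time
import uuid


def save_temp_audio(folder, audio_bytes):
    """Write bytes to <folder>/<uuid>.mp3 and return the generated file name."""
    os.makedirs(folder, exist_ok=True)
    filename = str(uuid.uuid4()) + ".mp3"
    with open(os.path.join(folder, filename), "wb") as fp:
        fp.write(audio_bytes)
    return filename


def purge_expired(folder, max_age_seconds, now=None):
    """Delete .mp3 files older than max_age_seconds; return the removed names."""
    now = time.time() if now is None else now
    removed = []
    for name in os.listdir(folder):
        path = os.path.join(folder, name)
        if name.endswith(".mp3") and now - os.path.getmtime(path) > max_age_seconds:
            os.remove(path)
            removed.append(name)
    return removed


demo_folder = tempfile.mkdtemp()
demo_name = save_temp_audio(demo_folder, b"fake mp3 bytes")
print(purge_expired(demo_folder, max_age_seconds=120))  # the file is fresh, so it is kept
```

`purge_expired` would have to be called periodically (for example before each new save); a delayed task per file is an alternative that avoids scanning the folder.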

First, the permanent-token question.

While going through the documentation, I also found the Python docs:

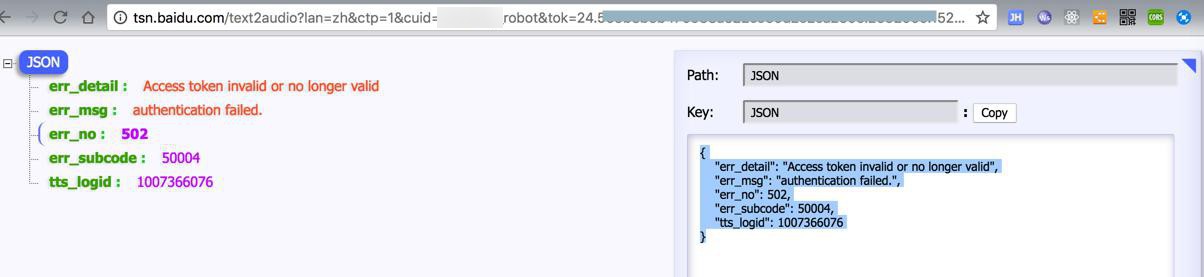

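However, I wanted to simulate what the Baidu API returns when the access_token is no longer valid.

It occurred to me that I could call the API with the previous access_token, the one that should have been invalidated when I refreshed it with the refresh_token, and see what comes back.

Surprisingly, the old token still worked.

So instead I changed the token value arbitrarily to simulate an invalid token.

The response was: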

{

"err_detail": "Access token invalid or no longer valid",

"err_msg": "authentication failed.",

"err_no": 502,

"err_subcode": 50004,

"tts_logid": 1007366076

}

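Error codes:

| Error code | Meaning |
| --- | --- |
| 500 | Unsupported input |
| 501 | Invalid input parameter |
| 502 | Token verification failed |
| 503 | Synthesis backend error |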

Search keywords:

Baidu err_subcode 50004

Baidu authentication failed 50004

A matching entry from the search results:

50004 | Passport Not Login | Not logged in to a Baidu account (passport) | 400
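The error table and the sample response above can be combined into a small lookup. This is only a sketch: `BAIDU_TTS_ERRORS` and `explain_tts_error` are names I made up, and the rule "only 502 warrants a token refresh" is my reading of the table, not something the documentation states.

```python
# Error codes and meanings from the table above
BAIDU_TTS_ERRORS = {
    500: "unsupported input",
    501: "invalid input parameter",
    502: "token verification failed",
    503: "synthesis backend error",
}


def explain_tts_error(resp_dict):
    """Return (description, should_refresh_token) for a TTS error response dict."""
    err_no = resp_dict.get("err_no")
    description = BAIDU_TTS_ERRORS.get(err_no, "unknown error")
    return description, err_no == 502


# The error response captured above
sample = {
    "err_detail": "Access token invalid or no longer valid",
    "err_msg": "authentication failed.",
    "err_no": 502,
    "err_subcode": 50004,
    "tts_logid": 1007366076,
}
print(explain_tts_error(sample))
```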

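So the tentative plan is:

Wrap Baidu's speech synthesis API with Flask.

Internally, use the Python SDK (installed with pip).

If the call returns a dict whose err_no is 502, the token is invalid or expired.

In that case, use the refresh_token to obtain a valid token again,

and retry the call once.

If everything is fine, the call returns the MP3 data.

After that, decide how to handle the data and where to store it so that it gets a URL that can be accessed directly from outside.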
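The "refresh once, then retry once" part of this plan can be sketched independently of Baidu. In the sketch below the synthesis call and the refresh call are passed in as functions; `synthesize_with_retry`, `fake_synthesize` and `fake_refresh` are hypothetical names, and the fake functions only stand in for real network calls.

```python
def synthesize_with_retry(text, synthesize, refresh_token, token_invalid_code=502):
    """Call synthesize(text); on a token error, refresh once and retry once.

    synthesize must return (is_ok, audio_bytes, err_no, err_msg).
    """
    is_ok, audio, err_no, err_msg = synthesize(text)
    if not is_ok and err_no == token_invalid_code:
        refresh_token()
        is_ok, audio, err_no, err_msg = synthesize(text)
    return is_ok, (audio if is_ok else None), err_msg


# Stand-in callables: the first call fails with 502 until the token is refreshed
state = {"token_valid": False, "refreshes": 0}


def fake_synthesize(text):
    if state["token_valid"]:
        return True, b"mp3:" + text.encode("utf-8"), 0, ""
    return False, None, 502, "authentication failed."


def fake_refresh():
    state["token_valid"] = True
    state["refreshes"] += 1


result = synthesize_with_retry("hello", fake_synthesize, fake_refresh)
print(result)
```

Limiting the retry to a single attempt keeps a permanently broken credential from causing an endless loop.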

Looking at a reference example, I found:

// For parameter meanings, see https://ai.baidu.com/docs#/TTS-API/41ac79a6

audio = btts({

。。。

onSuccess: function(htmlAudioElement) {

audio = htmlAudioElement;

playBtn.innerText = '播放';

},

So it looks like btts directly returns the audio HTML element?

Looking further, I found:

document.body.append(audio);

audio.setAttribute('src', URL.createObjectURL(xhr.response));

Does this create a local file?

Searching for:

URL.createObjectURL

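“A File object is simply a file. For example, when files are uploaded with an input type="file" tag, each file in it is a File object.

A Blob object is binary data. For example, an object created with new Blob() is a Blob object; likewise, in XMLHttpRequest, if responseType is set to blob, the returned value is also a Blob object.”

So here the MP3 binary data comes back as a Blob and is passed to createObjectURL, which generates a temporary object URL for it that the audio element can play.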

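-> So the interface I wrap later could support both modes:

- return the MP3 URL directly

- return the MP3 binary data as a file

The return type can be selected by an input parameter.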
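As a rough sketch of how one interface could serve both modes, the helper below shapes the reply according to a requested output type. Everything here is an assumption for illustration: the name `build_tts_result`, the `output` parameter values, the reply layout, and the `base_url` default (host, port and path are made up).

```python
def build_tts_result(audio_bytes, filename, output="url",
                     base_url="http://127.0.0.1:5000/tmp/audio"):
    """Shape the reply according to the caller's requested output type."""
    if output == "url":
        # The caller gets a link and fetches the audio in a second request
        return {"type": "url", "audioUrl": base_url + "/" + filename}
    if output == "binary":
        # The caller gets the MP3 bytes directly
        return {"type": "binary", "contentType": "audio/mpeg", "data": audio_bytes}
    raise ValueError("unsupported output type: %s" % output)


print(build_tts_result(b"\x00\x01", "demo.mp3"))
print(build_tts_result(b"\x00\x01", "demo.mp3", output="binary")["contentType"])
```

In a web API, the `output` value would typically come from a query parameter, and the binary branch would be sent as a file response rather than embedded in JSON.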


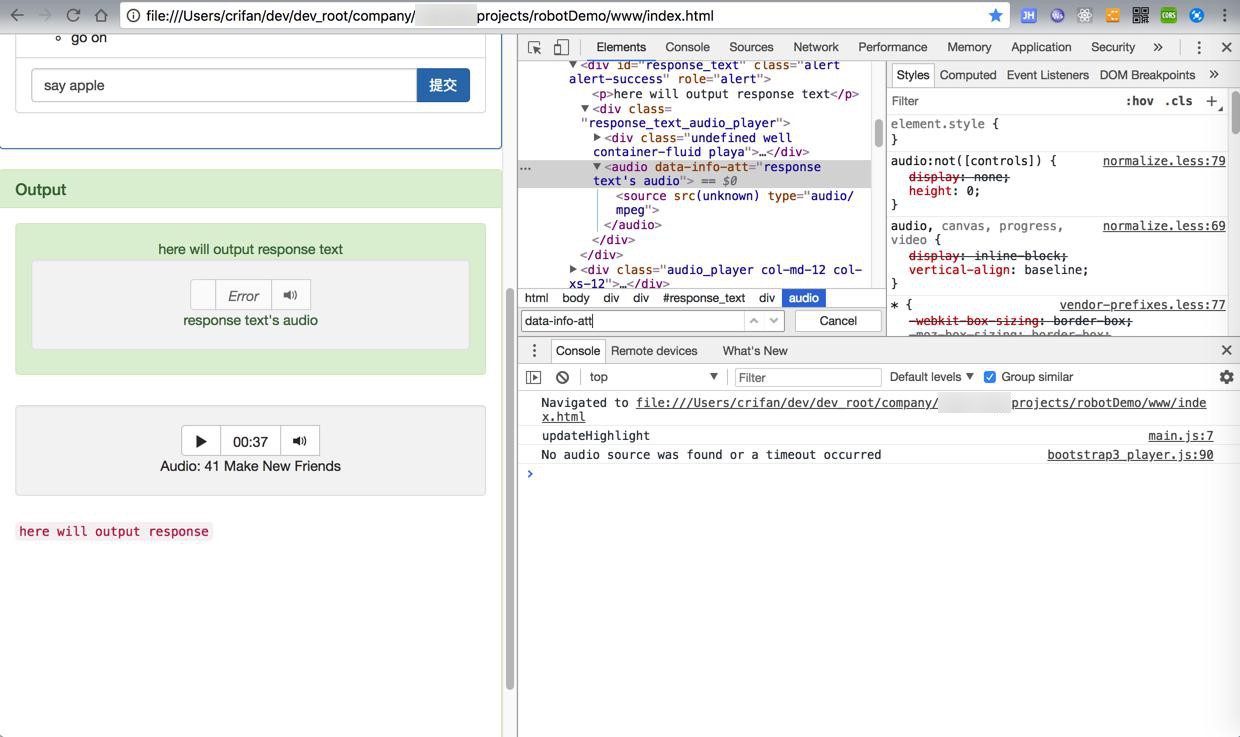

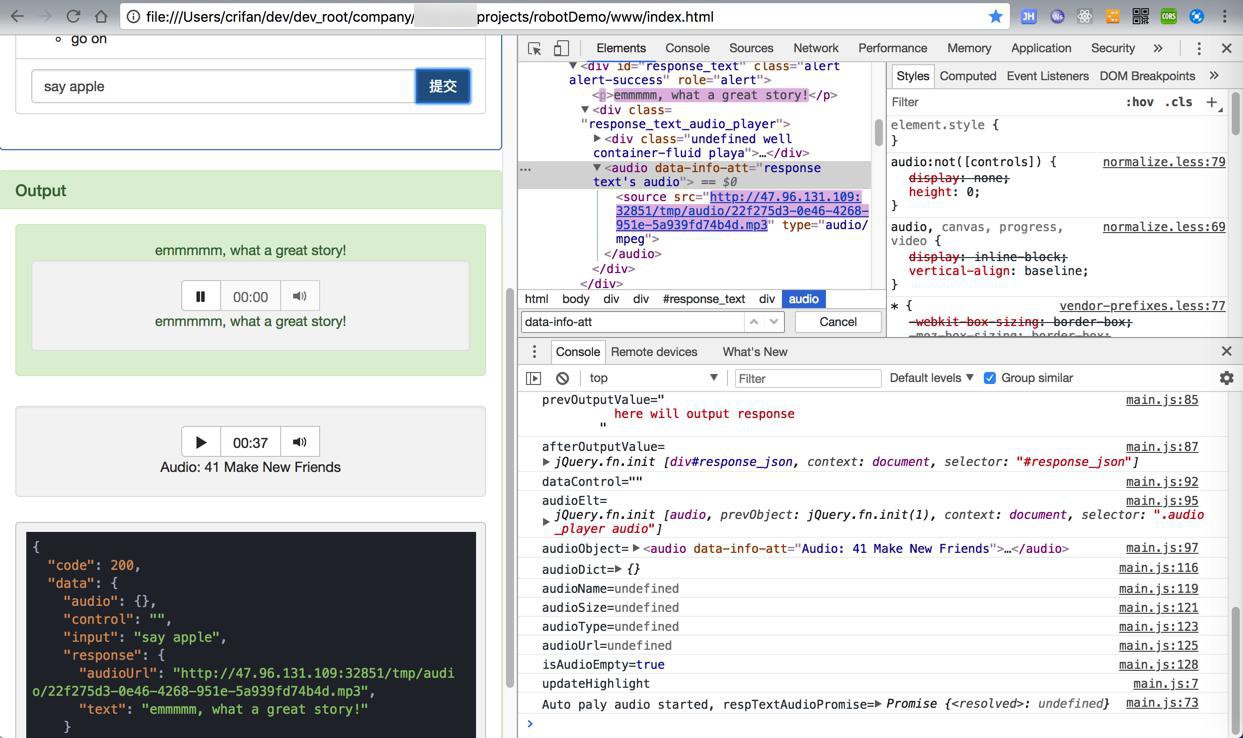

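In the frontend web page, the code that previously only displayed the response text is updated to parse the returned URL of the (temporary) MP3 file and play it with the audio player: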

var curResponseDict = respJsonObj["data"]["response"];
console.log("curResponseDict=%o", curResponseDict);

var curResponseText = curResponseDict["text"];
console.log("curResponseText=%s", curResponseText);
$('#response_text p').text(curResponseText);

var curResponseAudioUrl = curResponseDict["audioUrl"];
console.log("curResponseAudioUrl=%s", curResponseAudioUrl);
if (curResponseAudioUrl) {
    console.log("now play the response text's audio %s", curResponseAudioUrl);
    var respTextAudioObj = $(".response_text_audio_player audio")[0];
    console.log("respTextAudioObj=%o", respTextAudioObj);

    $(".response_text_audio_player .col-sm-offset-1").text(curResponseText);
    $(".response_text_audio_player audio source").attr("src", curResponseAudioUrl);
    respTextAudioObj.load();
    console.log("has load respTextAudioObj=%o", respTextAudioObj);

    // play() returns a Promise in modern browsers (undefined in older ones)
    var respTextAudioPromise = respTextAudioObj.play();
    // console.log("respTextAudioPromise=%o", respTextAudioPromise);
    if (respTextAudioPromise !== undefined) {
        respTextAudioPromise.then(() => {
            // Auto-play started
            console.log("Auto play audio started, respTextAudioPromise=%o", respTextAudioPromise);
        }).catch(error => {
            // Auto-play was prevented
            // Show a UI element to let the user manually start playback
            console.error("play response text's audio promise error=%o", error);
            //NotAllowedError: The request is not allowed by the user agent or the platform in the current context, possibly because the user denied permission.
        });
    }
}

The audio for the returned text can now be played.

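Then, after waiting about 1 second, play the requested file.

So that needs to be solved first.

Then I deliberately went further and made the page show an error message when something goes wrong. Along the way:

[Solved] How to concatenate or format strings in JS

and:

[Solved] Getting the detailed error information returned by a jQuery ajax GET in JS

[Summary]

In the end, I got the result I wanted.

Backend:

Flask REST API, plus initialization and invocation of the Baidu API: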

app.py

from flask import Flask

from flask import jsonify

from flask_restful import Resource, Api, reqparse

import logging

from logging.handlers import RotatingFileHandler

from bson.objectid import ObjectId

from flask import send_file

import os

import io

import re

from urllib.parse import quote

import json

import uuid

from flask_cors import CORS

import requests

from celery import Celery

################################################################################

# Global Definitions

################################################################################

"""

http://ai.baidu.com/docs#/TTS-API/top

500 unsupported input

501 invalid input parameter

502 token verification failed

503 synthesis backend error

"""

BAIDU_ERR_NOT_SUPPORT_PARAM = 500

BAIDU_ERR_PARAM_INVALID = 501

BAIDU_ERR_TOKEN_INVALID = 502

BAIDU_ERR_BACKEND_SYNTHESIS_FAILED = 503

################################################################################

# Global Variables

################################################################################

log = None

app = None

"""

{

"access_token": "24.569bcccccccc11192484",

"session_key": "9mxxxxxxEIB==",

"scope": "public audio_voice_assistant_get audio_tts_post wise_adapt lebo_resource_base lightservice_public hetu_basic lightcms_map_poi kaidian_kaidian ApsMisTest_Test权限 vis-classify_flower lpq_开放 cop_helloScope ApsMis_fangdi_permission smartapp_snsapi_base",

"refresh_token": "25.6acfxxxx2483",

"session_secret": "121xxxxxfa",

"expires_in": 2592000

}

"""

gCurBaiduRespDict = {} # get baidu token resp dict

gTempAudioFolder = ""

################################################################################

# Global Function

################################################################################

def generateUUID(prefix = ""):
    generatedUuid4 = uuid.uuid4()
    generatedUuid4Str = str(generatedUuid4)
    newUuid = prefix + generatedUuid4Str
    return newUuid

#----------------------------------------

# Audio Synthesis / TTS

#----------------------------------------

def createAudioTempFolder():
    """create folder to save later temp audio files"""
    global log, gTempAudioFolder

    # init audio temp folder for later store temp audio file
    audioTmpFolder = app.config["AUDIO_TEMP_FOLDER"]
    log.info("audioTmpFolder=%s", audioTmpFolder)
    curFolderAbsPath = os.getcwd() #'/Users/crifan/dev/dev_root/company/xxx/projects/robotDemo/server'
    log.info("curFolderAbsPath=%s", curFolderAbsPath)
    audioTmpFolderFullPath = os.path.join(curFolderAbsPath, audioTmpFolder)
    log.info("audioTmpFolderFullPath=%s", audioTmpFolderFullPath)
    if not os.path.exists(audioTmpFolderFullPath):
        os.makedirs(audioTmpFolderFullPath)
        log.info("++++++ Created tmp audio folder: %s", audioTmpFolderFullPath)

    gTempAudioFolder = audioTmpFolderFullPath
    log.info("gTempAudioFolder=%s", gTempAudioFolder)

def initAudioSynthesis():
    """
    init audio synthesis related:
        init token
    :return:
    """
    getBaiduToken()
    createAudioTempFolder()

def getBaiduToken():
    """get baidu token"""
    global app, log, gCurBaiduRespDict

    getBaiduTokenUrlTemplate = "https://openapi.baidu.com/oauth/2.0/token?grant_type=client_credentials&client_id=%s&client_secret=%s"
    getBaiduTokenUrl = getBaiduTokenUrlTemplate % (app.config["BAIDU_API_KEY"], app.config["BAIDU_SECRET_KEY"])
    log.info("getBaiduTokenUrl=%s", getBaiduTokenUrl) # https://openapi.baidu.com/oauth/2.0/token?grant_type=client_credentials&client_id=xxxz&client_secret=xxxx
    resp = requests.get(getBaiduTokenUrl)
    log.info("resp=%s", resp)
    respJson = resp.json()
    log.info("respJson=%s", respJson) #{'access_token': '24.xxx.2592000.1528609320.282335-11192484', 'session_key': 'xx+I/xx+6KwgZmw==', 'scope': 'public audio_voice_assistant_get audio_tts_post wise_adapt lebo_resource_base lightservice_public hetu_basic lightcms_map_poi kaidian_kaidian ApsMisTest_Test权限 vis-classify_flower lpq_开放 cop_helloScope ApsMis_fangdi_permission smartapp_snsapi_base', 'refresh_token': '25.xxx', 'session_secret': 'cxxx6e', 'expires_in': 2592000}
    if resp.status_code == 200:
        gCurBaiduRespDict = respJson
        log.info("get baidu token resp: %s", gCurBaiduRespDict)
    else:
        log.error("error while get baidu token: %s", respJson)
        # example error responses:
        #{'error': 'invalid_client', 'error_description': 'Client authentication failed'}
        #{'error': 'invalid_client', 'error_description': 'unknown client id'}
        #{'error': 'unsupported_grant_type', 'error_description': 'The authorization grant type is not supported'}

def refreshBaiduToken():
    """refresh baidu token when current token invalid"""
    global app, log, gCurBaiduRespDict

    if gCurBaiduRespDict:
        refreshBaiduTokenUrlTemplate = "https://openapi.baidu.com/oauth/2.0/token?grant_type=refresh_token&refresh_token=%s&client_id=%s&client_secret=%s"
        refreshBaiduTokenUrl = refreshBaiduTokenUrlTemplate % (gCurBaiduRespDict["refresh_token"], app.config["BAIDU_API_KEY"], app.config["BAIDU_SECRET_KEY"])
        log.info("refreshBaiduTokenUrl=%s", refreshBaiduTokenUrl) # https://openapi.baidu.com/oauth/2.0/token?grant_type=refresh_token&refresh_token=25.1xxxx.xx.1841379583.282335-11192483&client_id=Sxxxxz&client_secret=47dxxxxa
        resp = requests.get(refreshBaiduTokenUrl)
        log.info("resp=%s", resp)
        respJson = resp.json()
        log.info("respJson=%s", respJson)
        if resp.status_code == 200:
            gCurBaiduRespDict = respJson
            log.info("Ok to refresh baidu token response: %s", gCurBaiduRespDict)
        else:
            log.error("error while refresh baidu token: %s", respJson)
    else:
        log.error("Can't refresh baidu token for previous not get token")

def baiduText2Audio(unicodeText):
    """call baidu text2audio to generate mp3 audio from text"""
    global app, log, gCurBaiduRespDict

    log.info("baiduText2Audio: unicodeText=%s", unicodeText)

    isOk = False
    mp3BinData = None
    errNo = 0
    errMsg = "Unknown error"

    if not gCurBaiduRespDict:
        errMsg = "Need get baidu token before call text2audio"
        return isOk, mp3BinData, errNo, errMsg

    utf8Text = unicodeText.encode("utf-8")
    log.info("utf8Text=%s", utf8Text)
    encodedUtf8Text = quote(unicodeText)
    log.info("encodedUtf8Text=%s", encodedUtf8Text)

    # http://ai.baidu.com/docs#/TTS-API/top
    tex = encodedUtf8Text # text to synthesize, UTF-8 encoded. Fewer than 512 Chinese characters or English letters/digits. (The text is converted to GBK on Baidu's servers and must then be shorter than 1024 bytes)
    tok = gCurBaiduRespDict["access_token"] # developer access_token obtained from the open platform (see the "authentication mechanism" section of the docs)
    cuid = app.config["FLASK_APP_NAME"] # unique user identifier, used to distinguish users and compute UV. Recommended: the machine's MAC address or IMEI, up to 60 characters
    ctp = 1 # client type; for web, use the fixed value 1
    lan = "zh" # fixed value zh. Language selection; only the mixed Chinese-English mode exists, so use the fixed value zh
    spd = 5 # speed, 0-9, default 5 (medium)
    pit = 5 # pitch, 0-9, default 5 (medium)
    # vol = 5 # volume, 0-9, default 5 (medium)
    vol = 9
    per = 0 # voice: 0 = standard female, 1 = standard male, 3 = emotional synthesis (Du Xiaoyao), 4 = emotional synthesis (Du Yaya); default is standard female
    getBaiduSynthesizedAudioTemplate = "http://tsn.baidu.com/text2audio?lan=%s&ctp=%s&cuid=%s&tok=%s&vol=%s&per=%s&spd=%s&pit=%s&tex=%s"
    getBaiduSynthesizedAudioUrl = getBaiduSynthesizedAudioTemplate % (lan, ctp, cuid, tok, vol, per, spd, pit, tex)
    log.info("getBaiduSynthesizedAudioUrl=%s", getBaiduSynthesizedAudioUrl) # http://tsn.baidu.com/text2audio?lan=zh&ctp=1&cuid=RobotQA&tok=24.5f056b15e9d5da63256bac89f64f61b5.2592000.1528609737.282335-11192483&vol=5&per=0&spd=5&pit=5&tex=as%20a%20book-collector%2C%20i%20have%20the%20story%20you%20just%20want%20to%20listen%21
    resp = requests.get(getBaiduSynthesizedAudioUrl)
    log.info("resp=%s", resp)
    respContentType = resp.headers["Content-Type"]
    respContentTypeLowercase = respContentType.lower() #'audio/mp3'
    log.info("respContentTypeLowercase=%s", respContentTypeLowercase)
    if respContentTypeLowercase == "audio/mp3":
        # success: the body is the mp3 binary data itself
        mp3BinData = resp.content
        log.info("resp content is binary data of mp3, length=%d", len(mp3BinData))
        isOk = True
        errMsg = ""
    elif respContentTypeLowercase == "application/json":
        """
        {
            'err_detail': 'Invalid params per or lan!',
            'err_msg': 'parameter error.',
            'err_no': 501,
            'err_subcode': 50000,
            'tts_logid': 642798357
        }

        {
            'err_detail': 'Invalid params per&pdt!',
            'err_msg': 'parameter error.',
            'err_no': 501,
            'err_subcode': 50000,
            'tts_logid': 1675521246
        }

        {
            'err_detail': 'Access token invalid or no longer valid',
            'err_msg': 'authentication failed.',
            'err_no': 502,
            'err_subcode': 50004,
            'tts_logid': 4221215043
        }
        """
        log.info("resp content is json -> occur error")
        isOk = False
        respDict = resp.json()
        log.info("respDict=%s", respDict)
        errNo = respDict["err_no"]
        errMsg = respDict["err_msg"] + " " + respDict["err_detail"]
    else:
        isOk = False
        errMsg = "Unexpected response content-type: %s" % respContentTypeLowercase

    return isOk, mp3BinData, errNo, errMsg

def doAudioSynthesis(unicodeText):
    """
    do audio synthesis from unicode text
    if failed for token invalid/expired, will refresh token to do one more retry
    """
    global app, log, gCurBaiduRespDict
    isOk = False
    audioBinData = None
    errMsg = ""

    # # for debug
    # gCurBaiduRespDict["access_token"] = "99.569b3b5b470938a522ce60d2e2ea2506.2592000.1528015602.282335-11192483"

    log.info("doAudioSynthesis: unicodeText=%s", unicodeText)
    isOk, audioBinData, errNo, errMsg = baiduText2Audio(unicodeText)
    log.info("isOk=%s, errNo=%d, errMsg=%s", isOk, errNo, errMsg)

    if isOk:
        errMsg = ""
        log.info("got synthesized audio binary data length=%d", len(audioBinData))
    else:
        if errNo == BAIDU_ERR_TOKEN_INVALID:
            # token invalid or expired: refresh it and retry exactly once
            log.warning("Token invalid -> refresh token")
            refreshBaiduToken()

            isOk, audioBinData, errNo, errMsg = baiduText2Audio(unicodeText)
            log.info("after refresh token: isOk=%s, errNo=%s, errMsg=%s", isOk, errNo, errMsg)
        else:
            log.warning("try synthesized audio occur error: errNo=%d, errMsg=%s", errNo, errMsg)
            audioBinData = None

    log.info("return isOk=%s, errMsg=%s", isOk, errMsg)
    if audioBinData:
        log.info("audio binary bytes=%d", len(audioBinData))
    return isOk, audioBinData, errMsg

def testAudioSynthesis():
    global app, log, gTempAudioFolder

    testInputUnicodeText = u"as a book-collector, i have the story you just want to listen!"
    isOk, audioBinData, errMsg = doAudioSynthesis(testInputUnicodeText)
    if isOk:
        audioBinDataLen = len(audioBinData)
        log.info("Now will save audio binary data %d bytes to file", audioBinDataLen)

        # 1. save mp3 binary data into tmp file
        newUuid = generateUUID()
        log.info("newUuid=%s", newUuid)
        tempFilename = newUuid + ".mp3"
        log.info("tempFilename=%s", tempFilename)
        if not gTempAudioFolder:
            createAudioTempFolder()
        tempAudioFullname = os.path.join(gTempAudioFolder, tempFilename) #'/Users/crifan/dev/dev_root/company/xxx/projects/robotDemo/server/tmp/audio/2aba73d1-f8d0-4302-9dd3-d1dbfad44458.mp3'
        log.info("tempAudioFullname=%s", tempAudioFullname)

        # the with block closes the file, so no explicit close() is needed
        with open(tempAudioFullname, 'wb') as tmpAudioFp:
            log.info("tmpAudioFp=%s", tmpAudioFp)
            tmpAudioFp.write(audioBinData)
            log.info("Done to write audio data into file of %d bytes", audioBinDataLen)

        # 2. use celery to delay delete tmp file
    else:
        log.warning("Fail to get synthesis audio for errMsg=%s", errMsg)

#----------------------------------------

# Flask API

#----------------------------------------

def sendFile(fileBytes, contentType, outputFilename):
    """Flask API use this to send out file (to browser, browser can directly download file)"""
    return send_file(
        io.BytesIO(fileBytes),
        # io.BytesIO(fileObj.read()),
        mimetype=contentType,
        as_attachment=True,
        # note: Flask 2.0+ renames this parameter to download_name
        attachment_filename=outputFilename
    )

################################################################################

# Global Init App

################################################################################

app = Flask(__name__)

CORS(app)

# app.config.from_object('config.DevelopmentConfig')

app.config.from_object('config.ProductionConfig')

logFormatterStr = app.config["LOG_FORMAT"]

logFormatter = logging.Formatter(logFormatterStr)

fileHandler = RotatingFileHandler(

app.config['LOG_FILE_FILENAME'],

maxBytes=app.config["LOF_FILE_MAX_BYTES"],

backupCount=app.config["LOF_FILE_BACKUP_COUNT"],

encoding="UTF-8")

fileHandler.setLevel(logging.DEBUG)

fileHandler.setFormatter(logFormatter)

app.logger.addHandler(fileHandler)

app.logger.setLevel(logging.DEBUG) # set root log level

log = app.logger

log.info("app=%s", app)

# log.debug("app.config=%s", app.config)

api = Api(app)

log.info("api=%s", api)

celeryApp = Celery(app.name, broker=app.config['CELERY_BROKER_URL'])

celeryApp.conf.update(app.config)

log.info("celeryApp=%s", celeryApp)

aiContext = Context()

log.info("aiContext=%s", aiContext)

initAudioSynthesis()

# testAudioSynthesis()

...

#----------------------------------------

# Celery tasks

#----------------------------------------

# @celeryApp.task()

@celeryApp.task

# @celeryApp.task(name=app.config["CELERY_TASK_NAME"] + ".deleteTmpAudioFile")

def deleteTmpAudioFile(filename):
    """
    delete tmp audio file from filename
    eg: 98fc7c46-7aa0-4dd7-aa9d-89fdf516abd6.mp3
    """
    global log

    log.info("deleteTmpAudioFile: filename=%s", filename)

    audioTmpFolder = app.config["AUDIO_TEMP_FOLDER"]
    # audioTmpFolder = "tmp/audio"
    log.info("audioTmpFolder=%s", audioTmpFolder)
    curFolderAbsPath = os.getcwd() #'/Users/crifan/dev/dev_root/company/xxx/projects/robotDemo/server'
    log.info("curFolderAbsPath=%s", curFolderAbsPath)
    audioTmpFolderFullPath = os.path.join(curFolderAbsPath, audioTmpFolder)
    log.info("audioTmpFolderFullPath=%s", audioTmpFolderFullPath)
    tempAudioFullname = os.path.join(audioTmpFolderFullPath, filename)
    #'/Users/crifan/dev/dev_root/company/xxx/projects/robotDemo/server/tmp/audio/2aba73d1-f8d0-4302-9dd3-d1dbfad44458.mp3'
    if os.path.isfile(tempAudioFullname):
        os.remove(tempAudioFullname)
        log.info("Ok to delete file %s", tempAudioFullname)
    else:
        log.warning("No need to remove for not exist file %s", tempAudioFullname)

# log.info("deleteTmpAudioFile=%s", deleteTmpAudioFile)

# log.info("deleteTmpAudioFile.name=%s", deleteTmpAudioFile.name)

# log.info("celeryApp.tasks=%s", celeryApp.tasks)

#----------------------------------------

# Rest API

#----------------------------------------

class RobotQaAPI(Resource):

    def processResponse(self, respDict):
        """
        process response dict before return
        generate audio for response text part
        """
        global log, gTempAudioFolder

        tmpAudioUrl = ""

        unicodeText = respDict["data"]["response"]["text"]
        log.info("unicodeText=%s", unicodeText)

        if not unicodeText:
            log.info("No response text to do audio synthesis")
            return jsonify(respDict)

        isOk, audioBinData, errMsg = doAudioSynthesis(unicodeText)
        if isOk:
            audioBinDataLen = len(audioBinData)
            log.info("audioBinDataLen=%s", audioBinDataLen)

            # 1. save mp3 binary data into tmp file
            newUuid = generateUUID()
            log.info("newUuid=%s", newUuid)
            tempFilename = newUuid + ".mp3"
            log.info("tempFilename=%s", tempFilename)
            if not gTempAudioFolder:
                createAudioTempFolder()
            tempAudioFullname = os.path.join(gTempAudioFolder, tempFilename)
            log.info("tempAudioFullname=%s", tempAudioFullname) # 'xxx/tmp/audio/2aba73d1-f8d0-4302-9dd3-d1dbfad44458.mp3'

            # the with block closes the file, so no explicit close() is needed
            with open(tempAudioFullname, 'wb') as tmpAudioFp:
                log.info("tmpAudioFp=%s", tmpAudioFp)
                tmpAudioFp.write(audioBinData)
                log.info("Saved %d bytes data into temp audio file %s", audioBinDataLen, tempAudioFullname)

            # 2. use celery to delay delete tmp file
            delayTimeToDelete = app.config["CELERY_DELETE_TMP_AUDIO_FILE_DELAY"]
            deleteTmpAudioFile.apply_async([tempFilename], countdown=delayTimeToDelete)
            log.info("Delay %s seconds to delete %s", delayTimeToDelete, tempFilename)

            # generate temp audio file url
            # /tmp/audio
            tmpAudioUrl = "http://%s:%d/tmp/audio/%s" % (
                app.config["FILE_URL_HOST"],
                app.config["FLASK_PORT"],
                tempFilename)
            log.info("tmpAudioUrl=%s", tmpAudioUrl)
            respDict["data"]["response"]["audioUrl"] = tmpAudioUrl
        else:
            log.warning("Fail to get synthesis audio for errMsg=%s", errMsg)

        log.info("respDict=%s", respDict)
        return jsonify(respDict)

    def get(self):
        respDict = {
            "code": 200,
            "message": "generate response ok",
            "data": {
                "input": "",
                "response": {
                    "text": "",
                    "audioUrl": ""
                },
                "control": "",
                "audio": {}
            }
        }

        parser = reqparse.RequestParser()
        # i want to hear the story of Baby Sister Says No
        parser.add_argument('input', type=str, help="input words")
        log.info("parser=%s", parser)

        parsedArgs = parser.parse_args()
        log.info("parsedArgs=%s", parsedArgs)

        if not parsedArgs:
            respDict["data"]["response"]["text"] = "Can not recognize input"
            return self.processResponse(respDict)

        inputStr = parsedArgs["input"]
        log.info("inputStr=%s", inputStr)

        if not inputStr:
            respDict["data"]["response"]["text"] = "Can not recognize parameter input"
            return self.processResponse(respDict)

        respDict["data"]["input"] = inputStr

        aiResult = QueryAnalyse(inputStr, aiContext)
        log.info("aiResult=%s", aiResult)

        if aiResult["response"]:
            respDict["data"]["response"]["text"] = aiResult["response"]
        if aiResult["control"]:
            respDict["data"]["control"] = aiResult["control"]
        log.info('respDict["data"]=%s', respDict["data"])

        audioFileIdStr = aiResult["mediaId"]
        log.info("audioFileIdStr=%s", audioFileIdStr)

        if audioFileIdStr:
            audioFileObjectId = ObjectId(audioFileIdStr)
            log.info("audioFileObjectId=%s", audioFileObjectId)

            if fsCollection.exists(audioFileObjectId):
                audioFileObj = fsCollection.get(audioFileObjectId)
                log.info("audioFileObj=%s", audioFileObj)
                encodedFilename = quote(audioFileObj.filename)
                log.info("encodedFilename=%s", encodedFilename)
                respDict["data"]["audio"] = {
                    "contentType": audioFileObj.contentType,
                    "name": audioFileObj.filename,
                    "size": audioFileObj.length,
                    "url": "http://%s:%d/files/%s/%s" %
                           (app.config["FILE_URL_HOST"],
                            app.config["FLASK_PORT"],
                            audioFileObj._id,
                            encodedFilename)
                }
                log.info("respDict=%s", respDict)
                return self.processResponse(respDict)
            else:
                log.info("Can not find file from id %s", audioFileIdStr)
                respDict["data"]["audio"] = {}
                return self.processResponse(respDict)
        else:
            log.info("Not response file id")
            respDict["data"]["audio"] = {}
            return self.processResponse(respDict)

class GridfsAPI(Resource):

    def get(self, fileId, fileName=None):
        log.info("fileId=%s, file_name=%s", fileId, fileName)

        fileIdObj = ObjectId(fileId)
        log.info("fileIdObj=%s", fileIdObj)
        if not fsCollection.exists({"_id": fileIdObj}):
            respDict = {
                "code": 404,
                "message": "Can not find file from object id %s" % (fileId),
                "data": {}
            }
            return jsonify(respDict)

        fileObj = fsCollection.get(fileIdObj)
        log.info("fileObj=%s, filename=%s, chunkSize=%s, length=%s, contentType=%s",
                 fileObj, fileObj.filename, fileObj.chunk_size, fileObj.length, fileObj.content_type)
        log.info("lengthInMB=%.2f MB", float(fileObj.length / (1024 * 1024)))

        fileBytes = fileObj.read()
        log.info("len(fileBytes)=%s", len(fileBytes))

        outputFilename = fileObj.filename
        if fileName:
            outputFilename = fileName
        log.info("outputFilename=%s", outputFilename)

        return sendFile(fileBytes, fileObj.content_type, outputFilename)

class TmpAudioAPI(Resource):

    def get(self, filename=None):
        global gTempAudioFolder

        log.info("TmpAudioAPI: filename=%s", filename)

        tmpAudioFullPath = os.path.join(gTempAudioFolder, filename)
        log.info("tmpAudioFullPath=%s", tmpAudioFullPath)

        if not os.path.isfile(tmpAudioFullPath):
            log.warning("Not exists file %s", tmpAudioFullPath)
            respDict = {
                "code": 404,
                "message": "Can not find temp audio file %s" % filename,
                "data": {}
            }
            return jsonify(respDict)

        fileSize = os.path.getsize(tmpAudioFullPath)
        log.info("fileSize=%s", fileSize)

        with open(tmpAudioFullPath, "rb") as tmpAudioFp:
            fileBytes = tmpAudioFp.read()
            log.info("read out fileBytes length=%s", len(fileBytes))

            outputFilename = filename
            # contentType = "audio/mp3" # chrome use this
            contentType = "audio/mpeg" # most common and compatible
            return sendFile(fileBytes, contentType, outputFilename)

api.add_resource(PlaySongAPI, '/playsong', endpoint='playsong')

api.add_resource(RobotQaAPI, '/qa', endpoint='qa')

api.add_resource(GridfsAPI, '/files/<fileId>', '/files/<fileId>/<fileName>', endpoint='gridfs')

api.add_resource(TmpAudioAPI, '/tmp/audio/<filename>', endpoint='TmpAudio')

if __name__ == "__main__":
    app.run(
        host=app.config["FLASK_HOST"],
        port=app.config["FLASK_PORT"],
        debug=app.config["DEBUG"]
    )

config.py

class BaseConfig(object):
    DEBUG = False

    FLASK_PORT = 3xxxx
    # FLASK_HOST = "127.0.0.1"
    # FLASK_HOST = "localhost"
    # Note:
    # 1. to allow external access this server
    # 2. make sure here gunicorn parameter "bind" is same with here !!!
    FLASK_HOST = "0.0.0.0"

    # Flask app name
    FLASK_APP_NAME = "RobotQA"

    # Log File
    LOG_FILE_FILENAME = "logs/" + FLASK_APP_NAME + ".log"
    LOG_FORMAT = "[%(asctime)s %(levelname)s %(filename)s:%(lineno)d %(funcName)s] %(message)s"
    LOF_FILE_MAX_BYTES = 2*1024*1024
    LOF_FILE_BACKUP_COUNT = 10

    # return file url's host
    # FILE_URL_HOST = FLASK_HOST
    FILE_URL_HOST = "127.0.0.1"

    # Audio Synthesis / TTS
    # BAIDU_APP_ID = "1xxx3"
    BAIDU_API_KEY = "Sxxxxz"
    BAIDU_SECRET_KEY = "4xxxxxa"

    AUDIO_TEMP_FOLDER = "tmp/audio"

    # CELERY_TASK_NAME = "Celery_" + FLASK_APP_NAME
    # CELERY_BROKER_URL = "redis://localhost"
    CELERY_BROKER_URL = "redis://localhost:6379/0"
    # CELERY_RESULT_BACKEND = "redis://localhost:6379/0" # current not use result
    CELERY_DELETE_TMP_AUDIO_FILE_DELAY = 60 * 2 # two minutes

class DevelopmentConfig(BaseConfig):
    # DEBUG = True
    # for local dev, need access remote mongodb
    MONGODB_HOST = "47.xx.xx.xx"
    FILE_URL_HOST = "127.0.0.1"

class ProductionConfig(BaseConfig):
    FILE_URL_HOST = "47.xx.xx.xx"

Frontend:

main.html

<!doctype html>
<html lang="en">
<head>
    <!-- Required meta tags -->
    <meta charset="utf-8">
    <meta http-equiv="X-UA-Compatible" content="IE=edge">
    <meta name="viewport" content="width=device-width, initial-scale=1">
    <!-- <meta name="viewport" content="width=device-width, initial-scale=1, shrink-to-fit=no"> -->

    <!-- Bootstrap CSS -->
    <link rel="stylesheet" href="css/bootstrap-3.3.1/bootstrap.css">
    <!-- <link rel="stylesheet" href="css/highlightjs_default.css"> -->
    <link rel="stylesheet" href="css/highlight_atom-one-dark.css">
    <!-- <link rel="stylesheet" href="css/highlight_monokai-sublime.css"> -->
    <link rel="stylesheet" href="css/bootstrap3_player.css">
    <link rel="stylesheet" href="css/main.css">

    <title>xxx英语智能机器人演示</title>

    <!-- HTML5 shim and Respond.js for IE8 support of HTML5 elements and media queries -->
    <!-- WARNING: Respond.js doesn't work if you view the page via file:// -->
    <!--[if lt IE 9]>
    <script src="https://oss.maxcdn.com/html5shiv/3.7.2/html5shiv.min.js"></script>
    <script src="https://oss.maxcdn.com/respond/1.4.2/respond.min.js"></script>
    <![endif]-->
</head>
<body>
    <div class="logo text-center">
        <img class="mb-4" src="img/logo_transparent_183x160.png" alt="xxx Logo" width="72" height="72">
    </div>

    <h2>xxx英语智能机器人</h2>
    <h4>xxx Bot for Kids</h4>

    <div class="panel panel-primary">
        <div class="panel-heading">
            <h3 class="panel-title">Input</h3>
        </div>
        <div class="panel-body">
            <ul class="list-group">
                <li class="list-group-item">
                    <h3 class="panel-title">Input Example</h3>
                    <ul>
                        <li>i want to hear the story of apple</li>
                        <li>say story apple</li>
                        <li>say apple</li>
                        <li>next episode</li>
                        <li>next</li>
                        <li>i want you stop reading</li>
                        <li>stop reading</li>
                        <li>please go on</li>
                        <li>go on</li>
                    </ul>
                </li>
                <li class="list-group-item">
                    <!-- <form>
                        <div class="form-group input_request">
                            <input id="inputRequest" type="text" class="form-control" placeholder="请输入您要说的话" value="i want to hear the story of apple">
                        </div>
                        <div class="form-group">
                            <button id="submitInput" type="submit" class="btn btn-primary btn-lg col-sm-3 btn-block">提交</button>
                            <button id="clearInput" class="btn btn-secondary btn-lg col-sm-3" type="button">清除</button>
                            <button id="clearInput" class="btn btn-info btn-lg col-sm-3 btn-block" type="button">清除</button>
                        </div>
                    </form> -->
                    <div class="row">
                        <div class="col-lg-12">
                            <div class="input-group">
                                <input id="inputRequest" type="text" class="form-control" placeholder="请输入您要说的话" value="say apple">
                                <span class="input-group-btn">
                                    <button id="submitInput" type="submit" class="btn btn-primary" type="button">提交</button>
                                </span>
                            </div><!-- /input-group -->
                        </div><!-- /.col-lg-6 -->
                    </div>
                </li>
            </ul>
        </div>
    </div>

    <!-- <div class="input_example bg-light box-shadow">
        <h5>Input Example:</h5>
        <ul>
            <li>i want to hear the story of apple</li>
            <li>next episode</li>
            <li>i want you stop reading</li>
            <li>please go on</li>
        </ul>
    </div> -->

    <div class="panel panel-success">
        <div class="panel-heading">
            <h3 class="panel-title">Output</h3>
        </div>
        <div class="panel-body">
            <div id="response_text" class="alert alert-success" role="alert">
                <p>here will output response text</p>
                <div class="response_text_audio_player">
                    <audio controls data-info-att="response text's audio">
                        <source src="" type="audio/mpeg" />
                    </audio>
                </div>
            </div>

            <div class="audio_player col-md-12 col-xs-12">
                <audio controls data-info-att="">
                    <source src="" type="" />
                </audio>
            </div>

            <!-- <div id="audio_play_prevented" class="alert alert-warning alert-dismissible col-md-12 col-xs-12">
                <button type = "button" class="close" data-dismiss = "alert">x</button>
                <strong>Notice:</strong> Auto play prevented, please mannually click above play button to play
            </div> -->

            <!-- <div id="response_json" class="bg-light box-shadow">
                <pre><code class="json">here will output response</code></pre>
            </div> -->
            <!-- <pre id="response_json">
                <code class="json">here will output response</code>
            </pre> -->
            <div id="response_json">
                <code class="json">here will output response</code>
            </div>
        </div>
    </div>

    <!-- Optional JavaScript -->
    <!-- jQuery first, then Popper.js, then Bootstrap JS -->
    <!-- <script src="js/jquery-3.3.1.js"></script> -->
    <!-- <script src="https://ajax.googleapis.com/ajax/libs/jquery/1.11.1/jquery.min.js"></script> -->
    <script src="js/jquery/1.11.1/jquery-1.11.1.js"></script>
    <!-- <script src="https://code.jquery.com/jquery-3.3.1.slim.min.js" integrity="sha384-q8i/X+965DzO0rT7abK41JStQIAqVgRVzpbzo5smXKp4YfRvH+8abtTE1Pi6jizo" crossorigin="anonymous"></script> -->
    <!-- <script src="https://cdnjs.cloudflare.com/ajax/libs/popper.js/1.14.0/umd/popper.min.js" integrity="sha384-cs/chFZiN24E4KMATLdqdvsezGxaGsi4hLGOzlXwp5UZB1LY//20VyM2taTB4QvJ" crossorigin="anonymous"></script> -->
    <script src="js/popper-1.14.0/popper.min.js"></script>
    <!-- <script src="js/bootstrap.js"></script> -->
    <script src="js/bootstrap-3.3.1/bootstrap.min.js"></script>
    <script src="js/highlight.js"></script>
    <script src="js/bootstrap3_player.js"></script>
    <script src="js/main.js"></script>
</body>
</html>

main.css

.logo{

padding: 10px 2%;

}

h2{

text-align: center;

margin-top: 10px;

margin-bottom: 10px;

}

h4{

text-align: center;

margin-top: 0px;

margin-bottom: 20px;

}

form {

text-align: center;

}

.form-group {

/*padding-left: 1%;*/

/*padding-right: 1%;*/

}

.input_example {

/*padding: 1px 1%;*/

}

#response_json {

/*width: 96%;*/

height: 380px;

border-radius: 10px;

padding-top: 20px;

/*padding-left: 1%;*/

/*padding-right: 1%;*/

}

#response_text {

text-align: center !important;

font-size: 14px;

/* padding-left: 4%;

padding-right: 4%; */

}

/*pre {*/

/*padding-left: 2%;*/

/*padding-right: 2%;*/

/*}*/

.audio_player {

margin-top: 10px;

margin-bottom: 5px;

text-align: center;

padding-left: 0 !important;

padding-right: 0 !important;

}

.response_text_audio_player{

/* visibility: hidden; */

width: 100%;

/* height: 1px !important; */

height: 100px;

}

/* #audio_play_prevented {

display: none;

} */

main.js

if (!String.format) {

String.format = function(format) {

var args = Array.prototype.slice.call(arguments, 1);

return format.replace(/{(\d+)}/g, function(match, number) {

return typeof args[number] != 'undefined'

? args[number]

: match

;

});

};

}

$(document).ready(function(){

$('[data-toggle="tooltip"]').tooltip();

// when got response json, update to highlight it

function updateHighlight() {

console.log("updateHighlight");

$('pre code').each(function(i, block) {

hljs.highlightBlock(block);

});

}

updateHighlight();

$("#submitInput").click(function(event){

event.preventDefault();

ajaxSubmitInput();

});

function ajaxSubmitInput() {

console.log("ajaxSubmitInput");

var inputRequest = $("#inputRequest").val();

console.log("inputRequest=%s", inputRequest);

var encodedInputRequest = encodeURIComponent(inputRequest);

console.log("encodedInputRequest=%s", encodedInputRequest);

// var qaUrl = "http://127.0.0.1:32851/qa";

var qaUrl = "http://xxx:32851/qa";

console.log("qaUrl=%s", qaUrl);

var fullQaUrl = qaUrl + "?input=" + encodedInputRequest;

console.log("fullQaUrl=%s", fullQaUrl);

$.ajax({

type : "GET",

url : fullQaUrl,

success: function(respJsonObj){

console.log("respJsonObj=%o", respJsonObj);

// var respnJsonStr = JSON.stringify(respJsonObj);

//var beautifiedJespnJsonStr = JSON.stringify(respJsonObj, null, '\t');

var beautifiedJespnJsonStr = JSON.stringify(respJsonObj, null, 2);

console.log("beautifiedJespnJsonStr=%s", beautifiedJespnJsonStr);

var prevOutputValue = $('#response_json').text();

console.log("prevOutputValue=%o", prevOutputValue);

var afterOutputValue = $('#response_json').html('<pre><code class="json">' + beautifiedJespnJsonStr + "</code></pre>");

console.log("afterOutputValue=%o", afterOutputValue);

updateHighlight();

var curResponseDict = respJsonObj["data"]["response"];

console.log("curResponseDict=%o", curResponseDict);

var curResponseText = curResponseDict["text"];

console.log("curResponseText=%s", curResponseText);

$('#response_text p').text(curResponseText);

var curResponseAudioUrl = curResponseDict["audioUrl"];

console.log("curResponseAudioUrl=%s", curResponseAudioUrl);

if (curResponseAudioUrl) {

console.log("now play the response text's audio %s", curResponseAudioUrl);

var respTextAudioObj = $(".response_text_audio_player audio")[0];

console.log("respTextAudioObj=%o", respTextAudioObj);

$(".response_text_audio_player .col-sm-offset-1").text(curResponseText);

$(".response_text_audio_player audio source").attr("src", curResponseAudioUrl);

respTextAudioObj.load();

console.log("has load respTextAudioObj=%o", respTextAudioObj);

respTextAudioObj.onended = function() {

console.log("play response text's audio ended");

var dataControl = respJsonObj["data"]["control"];

console.log("dataControl=%o", dataControl);

var audioElt = $(".audio_player audio");

console.log("audioElt=%o", audioElt);

var audioObject = audioElt[0];

console.log("audioObject=%o", audioObject);

var playAudioPromise = undefined;

if (dataControl === "stop") {

//audioObject.stop();

audioObject.pause();

console.log("has pause audioObject=%o", audioObject);

} else if (dataControl === "continue") {

// // audioObject.load();

// audioObject.play();

// // audioObject.continue();

// console.log("has load and play audioObject=%o", audioObject);

playAudioPromise = audioObject.play();

}

if (respJsonObj["data"]["audio"]) {

var audioDict = respJsonObj["data"]["audio"];

console.log("audioDict=%o", audioDict);

var audioName = audioDict["name"];

console.log("audioName=%o", audioName);

var audioSize = audioDict["size"];

console.log("audioSize=%o", audioSize);

var audioType = audioDict["contentType"];

console.log("audioType=%o", audioType);

var audioUrl = audioDict["url"];

console.log("audioUrl=%o", audioUrl);

var isAudioEmpty = (!audioName && !audioSize && !audioType && !audioUrl);

console.log("isAudioEmpty=%o", isAudioEmpty);

if (isAudioEmpty) {

// var pauseAudioResult = audioObject.pause();

// console.log("pauseAudioResult=%o", pauseAudioResult);

// audioElt.attr("data-info-att", "");

// $(".col-sm-offset-1").text("");

} else {

if (audioName) {

audioElt.attr("data-info-att", audioName);

$(".audio_player .col-sm-offset-1").text(audioName);

}

if (audioType) {

$(".audio_player audio source").attr("type", audioType);

}

if (audioUrl) {

$(".audio_player audio source").attr("src", audioUrl);

audioObject.load();

console.log("has load audioObject=%o", audioObject);

}

console.log("dataControl=%s,audioUrl=%s", dataControl, audioUrl);

if ((dataControl === "") && audioUrl) {

playAudioPromise = audioObject.play();

} else if ((dataControl === "next") && (audioUrl)) {

playAudioPromise = audioObject.play();

}

}

} else {

console.log("empty respJsonObj['data']['audio']=%o", respJsonObj["data"]["audio"]);

}

if (playAudioPromise !== undefined) {

playAudioPromise.then(() => {

// Auto-play started

console.log("Auto play audio started, playAudioPromise=%o", playAudioPromise);

//for debug

// showAudioPlayPreventedNotice();

}).catch(error => {

// Auto-play was prevented

// Show a UI element to let the user manually start playback

showAudioPlayPreventedNotice();

console.error("play audio promise error=%o", error);

//NotAllowedError: The request is not allowed by the user agent or the platform in the current context, possibly because the user denied permission.

});

}

}

var respTextAudioPromise = respTextAudioObj.play();

// console.log("respTextAudioPromise=%o", respTextAudioPromise);

if (respTextAudioPromise !== undefined) {

respTextAudioPromise.then(() => {

// Auto-play started

console.log("Auto play audio started, respTextAudioPromise=%o", respTextAudioPromise);

}).catch(error => {

// Auto-play was prevented

// Show a UI element to let the user manually start playback

console.error("play response text's audio promise error=%o", error);

//NotAllowedError: The request is not allowed by the user agent or the platform in the current context, possibly because the user denied permission.

});

}

}

},

error : function(jqXHR, textStatus, errorThrown) {

console.error("jqXHR=%o, textStatus=%s, errorThrown=%s", jqXHR, textStatus, errorThrown);

var errDetail = String.format("status={0}\n\tstatusText={1}\n\tresponseText={2}", jqXHR.status, jqXHR.statusText, jqXHR.responseText);

var errStr = String.format("GET: {0}\nERROR:\t{1}", fullQaUrl, errDetail);

// $('#response_text p').text(errStr);

var responseError = $('#response_json').html('<pre><code class="html">' + errStr + "</code></pre>");

console.log("responseError=%o", responseError);

updateHighlight();

}

});

}

function showAudioPlayPreventedNotice(){

console.log("showAudioPlayPreventedNotice");

// var prevDisplayValue = $("#audio_play_prevented").css("display");

// console.log("prevDisplayValue=%o", prevDisplayValue);

// $("#audio_play_prevented").css({"display":"block"});

var curAudioPlayPreventedNoticeEltHtml = $("#audio_play_prevented").html();

console.log("curAudioPlayPreventedNoticeEltHtml=%o", curAudioPlayPreventedNoticeEltHtml);

if (curAudioPlayPreventedNoticeEltHtml !== undefined) {

console.log("already exist audio play prevented notice, so not insert again");

} else {

var audioPlayPreventedNoticeHtml = '<div id="audio_play_prevented" class="alert alert-warning alert-dismissible col-md-12 col-xs-12"><button type = "button" class="close" data-dismiss = "alert">x</button><strong>Notice:</strong> Auto play prevented, please manually click above play button to play</div>';

console.log("audioPlayPreventedNoticeHtml=%o", audioPlayPreventedNoticeHtml);

$(".audio_player").append(audioPlayPreventedNoticeHtml);

}

}

$("#clearInput").click(function(event){

// event.preventDefault();

console.log("event=%o", event);

$('#inputRequest').val("");

$('#response_json').html('<pre><code class="json">here will output response</code></pre>');

updateHighlight();

});

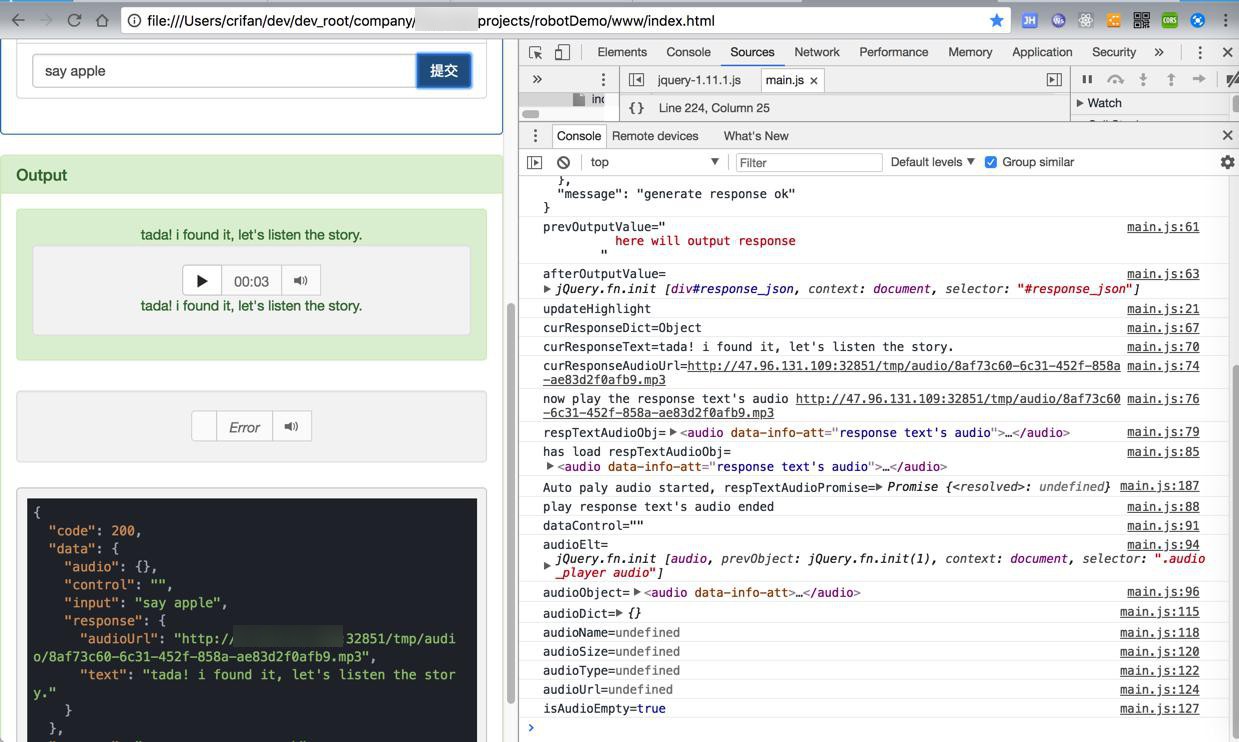

});效果:

点击提交后,后端生成临时的mp3的文件,返回到前端,前端可以正常加载并播放:
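
To make the flow in main.js easier to follow, here is a minimal sketch of the decision logic that runs after the spoken reply finishes playing, pulled out into two plain functions with no DOM access. The function names `parseQaResponse` and `decideMainAudioAction` and the example.com URLs are hypothetical and only for illustration. The response fields (`data.response.text`, `data.response.audioUrl`, `data.control`, `data.audio`) are the ones main.js reads. The sketch simplifies the original slightly by returning a single action instead of pausing or playing and then reloading the source.

```javascript
// Hypothetical helper: collect the fields main.js reads from the /qa response,
// tolerating missing parts instead of throwing on undefined.
function parseQaResponse(respJsonObj) {
  var data = (respJsonObj && respJsonObj.data) || {};
  var response = data.response || {};
  var audio = data.audio || {};
  return {
    text: response.text || "",
    textAudioUrl: response.audioUrl || "",
    // keep "missing" distinct from the empty string, as main.js compares with === ""
    control: typeof data.control === "string" ? data.control : null,
    audioName: audio.name || "",
    audioType: audio.contentType || "",
    audioUrl: audio.url || ""
  };
}

// Hypothetical helper: decide what the main audio player should do once the
// reply's own audio has ended. Returns "pause", "resume", "playNew" or "none".
function decideMainAudioAction(parsed) {
  if (parsed.control === "stop") {
    return "pause";
  }
  if (parsed.control === "continue") {
    return "resume";
  }
  if (parsed.audioUrl && (parsed.control === "" || parsed.control === "next")) {
    return "playNew";
  }
  return "none";
}

// Usage with a made-up response (URLs are placeholders)
var parsed = parseQaResponse({
  data: {
    response: { text: "ok", audioUrl: "http://example.com/tmp/reply.mp3" },
    control: "next",
    audio: { name: "story", contentType: "audio/mpeg", url: "http://example.com/story.mp3" }
  }
});
console.log(decideMainAudioAction(parsed)); // prints "playNew"
```

Keeping this decision in a pure function means it can be checked without a browser, while the actual `audio.play()` call and its promise handling stay in the click handler.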

Please credit the source when reposting: 在路上 » [Solved] Converting text to speech with a suitable online speech synthesis API