Continued from:

[Solved] Using gunicorn's gevent worker to fix singleton data sharing across multiple workers/processes

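After that, although gunicorn's gevent worker solved the singleton data-sharing problem for the Flask app, I found there were still 2 other processes, which break the singleton: each process creates its own, different singleton instance.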

Need to figure out why.

So I searched the logs for:

gMsTtsTokenSingleton=

Found:

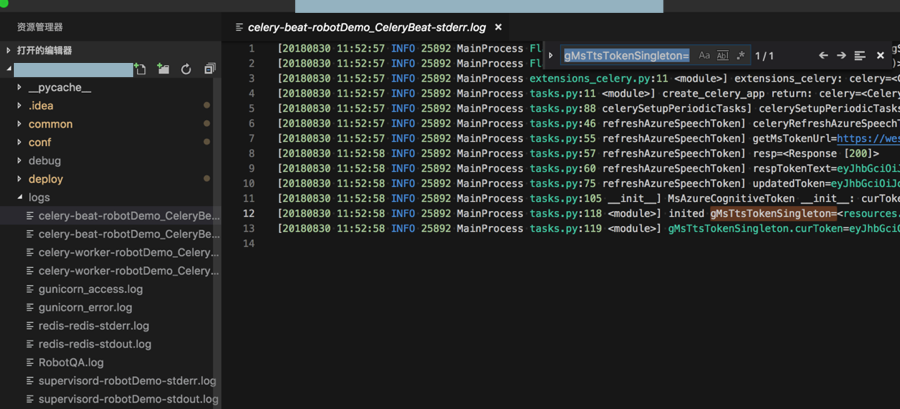

logs/celery-beat-robotDemo_CeleryBeat-stderr.log

has only one singleton.

But in the Flask app the singleton does not work:

it was initialized 3 times, each time as a different instance:

<code>[2018-08-30 11:52:58,365 INFO 25892 MainProcess tasks.py:118 <module>] inited gMsTtsTokenSingleton=<resources.tasks.MsTtsTokenSingleton object at 0x7f42df1048d0>
[2018-08-30 11:52:58,388 INFO 25895 MainProcess tasks.py:118 <module>] inited gMsTtsTokenSingleton=<resources.tasks.MsTtsTokenSingleton object at 0x7fc5dc7268d0>
[2018-08-30 11:52:58,985 INFO 25906 MainProcess tasks.py:118 <module>] inited gMsTtsTokenSingleton=<resources.tasks.MsTtsTokenSingleton object at 0x7f7e8ee69710>
</code>

Note, however, that the process IDs differ: 25892, 25895, 25906.

So these are different processes, even though all of them are named MainProcess.

So here is the puzzle.

The gunicorn config here is:

<code>worker_class = 'gevent'
workers = 1  # number of worker processes</code>

so there really is only a single worker.
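gunicorn config files are plain Python, so the two settings above can sit in a config file next to a server hook. A minimal sketch, assuming a hypothetical `gunicorn.conf.py`: the `post_fork(server, worker)` hook runs in each worker just after it is forked, and logging `worker.pid` there makes it easy to tell which PID in the logs belongs to the gunicorn worker. Keep in mind that even with `workers = 1`, gunicorn runs two processes (the master and one worker); the app is normally loaded in the worker unless `preload_app` is enabled.

```python
# gunicorn.conf.py (hypothetical file name; gunicorn config files are plain Python)
worker_class = "gevent"
workers = 1  # number of worker processes


def post_fork(server, worker):
    """Server hook that gunicorn calls just after a worker has been forked.

    Logging the worker PID here makes it easy to match gunicorn's worker
    against the PIDs that tasks.py prints when it creates its singleton.
    """
    server.log.info("gunicorn worker spawned (pid: %s)", worker.pid)
```

It would be loaded with `gunicorn -c gunicorn.conf.py <app module>`.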

Yet 3 processes show up for the Flask app,

so each of the 3 processes ends up with a different singleton.
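Module-level code such as the `gMsTtsTokenSingleton = ...` line in tasks.py runs once in every process that imports the module, so a module-level "singleton" is really one instance per process. The sketch below imitates that with a hypothetical `fake_tasks` module imported by several separate Python interpreters; each prints its own PID and object id, much like the 3 log lines above. The object ids are only meaningful inside their own process.

```python
import os
import subprocess
import sys
import tempfile
import textwrap

# Hypothetical stand-in for resources/tasks.py: the module body creates a
# "singleton" and prints the PID and id() of the object it just created.
MODULE_SRC = textwrap.dedent("""
    import os

    class TokenSingleton:
        pass

    gTokenSingleton = TokenSingleton()
    print(os.getpid(), id(gTokenSingleton))
""")


def run_in_separate_processes(count=3):
    """Import the same module in `count` separate Python interpreters and
    return the (pid, object id) pair reported by each one."""
    results = []
    with tempfile.TemporaryDirectory() as tmp:
        with open(os.path.join(tmp, "fake_tasks.py"), "w") as f:
            f.write(MODULE_SRC)
        env = dict(os.environ, PYTHONPATH=tmp)  # make fake_tasks importable
        for _ in range(count):
            out = subprocess.run(
                [sys.executable, "-c", "import fake_tasks"],
                env=env,
                capture_output=True,
                text=True,
                check=True,
            ).stdout.split()
            results.append((int(out[0]), int(out[1])))
    return results


if __name__ == "__main__":
    for pid, obj_id in run_in_separate_processes():
        # Each line comes from a different process with its own instance.
        print(f"pid={pid} singleton id={obj_id:#x}")
```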

I need to figure out why there are 3 processes.

Next, add thread info to the log format:

https://docs.python.org/2/library/logging.html#logrecord-attributes

| Attribute name | Format | Description |
|---|---|---|
| thread | %(thread)d | Thread ID (if available). |
| threadName | %(threadName)s | Thread name (if available). |

->

<code>LOG_FORMAT = "[%(asctime)s %(levelname)s %(process)d %(processName)s %(thread)d %(threadName)s %(filename)s:%(lineno)d %(funcName)s] %(message)s" </code>
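A minimal sketch of wiring that format into the standard `logging` module, so every record carries `%(process)d`, `%(processName)s`, `%(thread)d` and `%(threadName)s`. The logger name `pid_demo` and the `make_logger` helper are hypothetical; the project's own `common.FlaskLogSingleton` setup is not shown in this post.

```python
import io
import logging

LOG_FORMAT = ("[%(asctime)s %(levelname)s %(process)d %(processName)s "
              "%(thread)d %(threadName)s %(filename)s:%(lineno)d %(funcName)s] "
              "%(message)s")


def make_logger(stream, name="pid_demo"):
    """Return a logger (hypothetical name) that writes LOG_FORMAT lines to `stream`."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.handlers.clear()      # avoid duplicate handlers on repeated calls
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter(LOG_FORMAT))
    logger.addHandler(handler)
    logger.propagate = False     # do not also emit via the root logger
    return logger


if __name__ == "__main__":
    buf = io.StringIO()
    make_logger(buf).info("inited gMsTtsTokenSingleton=%r", object())
    print(buf.getvalue(), end="")
```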

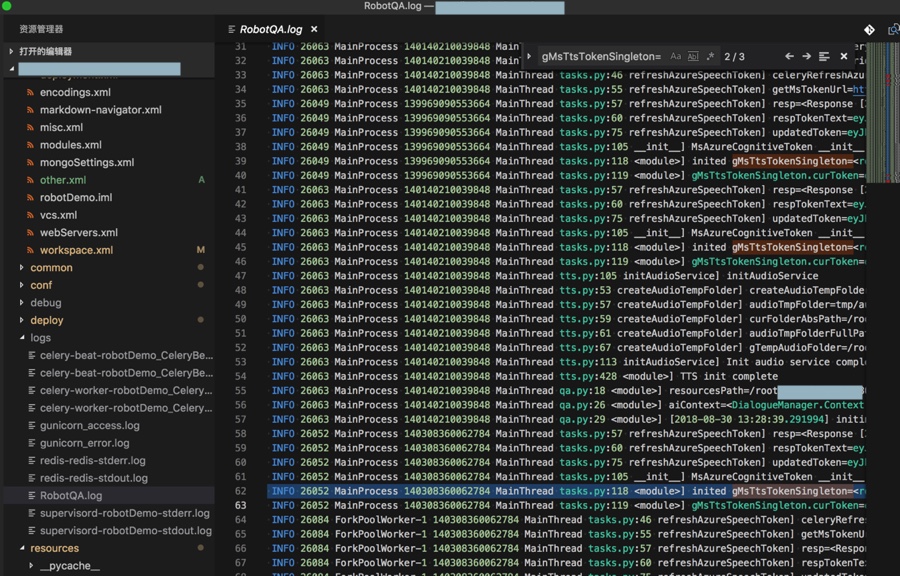

As expected, the result is the same as before: still 3 processes, each with a single thread (MainThread):

<code>[2018-08-30 13:28:37,129 INFO 26049 MainProcess 139969090553664 MainThread tasks.py:118 <module>] inited gMsTtsTokenSingleton=<resources.tasks.MsTtsTokenSingleton object at 0x7f4d0b32e128>
[2018-08-30 13:28:38,078 INFO 26063 MainProcess 140140210039848 MainThread tasks.py:118 <module>] inited gMsTtsTokenSingleton=<resources.tasks.MsTtsTokenSingleton object at 0x7f74ea6d9710>
[2018-08-30 13:28:39,545 INFO 26052 MainProcess 140308360062784 MainThread tasks.py:118 <module>] inited gMsTtsTokenSingleton=<resources.tasks.MsTtsTokenSingleton object at 0x7f9c09443908>
</code>

So why, with gunicorn's workers set to 1, are there still 3 processes and 3 singletons?

Then it occurred to me:

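perhaps the Celery worker started by supervisor also loads tasks.py,

and that accounts for the 3 processes?

Or perhaps,

inside the single worker, other internal modules, for example: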

resources/tts.py

<code>from resources.tasks import gMsTtsTokenSingleton </code>

import gMsTtsTokenSingleton from tasks.py, which creates multiple instances?
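The second hypothesis can be checked in isolation. Within one process, Python caches imported modules in `sys.modules`, so importing `resources.tasks` from several other modules executes its body only once and every importer gets the same object. A sketch with a hypothetical `fake_tasks2` module written to a temporary directory:

```python
import importlib
import os
import sys
import tempfile

MODULE_NAME = "fake_tasks2"  # hypothetical stand-in for resources.tasks
MODULE_SRC = (
    "class TokenSingleton:\n"
    "    pass\n"
    "\n"
    "gTokenSingleton = TokenSingleton()\n"
)


def import_twice(tmp_dir):
    """Import the same module twice in this process; return both singletons."""
    with open(os.path.join(tmp_dir, MODULE_NAME + ".py"), "w") as f:
        f.write(MODULE_SRC)
    importlib.invalidate_caches()      # new file on disk: refresh finder caches
    sys.modules.pop(MODULE_NAME, None)
    sys.path.insert(0, tmp_dir)
    try:
        # First import executes the module body and creates the instance.
        first = importlib.import_module(MODULE_NAME).gTokenSingleton
        # Second import is served from sys.modules; the body is not re-run.
        second = importlib.import_module(MODULE_NAME).gTokenSingleton
        return first, second
    finally:
        sys.path.remove(tmp_dir)
        sys.modules.pop(MODULE_NAME, None)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        a, b = import_twice(d)
        print(a is b)  # True: one instance per process, however often imported
```

The main in-process way to still end up with two instances is importing the same file under two different module names (for example as both `tasks` and `resources.tasks`), because `sys.modules` is keyed by name. The differing PIDs in the logs, though, point to separate processes rather than duplicate imports.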

I went through the logs looking for the cause.

No cause found at first.

So I kept looking.

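Next, besides the first process, 26049,

the second, 26063,

and the third, 26052:

how was each one created, and which file produced the first log line of each process?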
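Cross-referencing PIDs by hand gets tedious. A small sketch, assuming every record is on one line in the `LOG_FORMAT` defined above and that all lines use the same timestamp format, that reports the earliest record per PID, i.e. which file logged first in each process. The function name `first_record_per_pid` is hypothetical.

```python
import re
import sys

# One record per line in the LOG_FORMAT defined earlier:
# [date time LEVEL pid processName thread threadName file:line func] message
LINE_RE = re.compile(
    r"^\[(?P<ts>\S+ \S+) (?P<level>\w+) (?P<pid>\d+) (?P<pname>\S+) "
    r"(?P<tid>\d+) (?P<tname>\S+) (?P<src>\S+):(?P<lineno>\d+) "
    r"(?P<func>\S+)\] (?P<msg>.*)$"
)


def first_record_per_pid(lines):
    """Map each PID to (timestamp, 'file:line', message) of its earliest record.

    Assumes all lines share one timestamp format, so that comparing the
    timestamp strings orders them chronologically.
    """
    first = {}
    for line in lines:
        m = LINE_RE.match(line.strip())
        if m is None:
            continue  # not a LOG_FORMAT line
        pid = int(m["pid"])
        entry = (m["ts"], f"{m['src']}:{m['lineno']}", m["msg"])
        if pid not in first or entry[0] < first[pid][0]:
            first[pid] = entry
    return first


if __name__ == "__main__":
    # Usage: cat logs/*.log | python first_record_per_pid.py
    for pid, (ts, where, msg) in sorted(first_record_per_pid(sys.stdin).items()):
        print(pid, ts, where, msg[:60])
```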

<code>[2018-08-30 13:28:35,849 INFO 26063 MainProcess 140140210039848 MainThread FlaskLogSingleton.py:54 <module>] LoggerSingleton inited, logSingleton=<common.FlaskLogSingleton.LoggerSingleton object at 0x7f74eb1b5c18>
[2018-08-30 13:28:35,271 INFO 26052 MainProcess 140308360062784 MainThread FlaskLogSingleton.py:54 <module>] LoggerSingleton inited, logSingleton=<common.FlaskLogSingleton.LoggerSingleton object at 0x7f9c08e71048>
</code>

So it must be some other file doing

<code>from common.FlaskLogSingleton import log </code>

which triggered the logger initialization.

Then I noticed that

resources/tasks.py

also contains:

<code>from common.FlaskLogSingleton import log </code>

-> Then I remembered:

the supervisor config that manages Celery runs:

<code>[program:robotDemo_CeleryWorker]
command=/root/.local/share/virtualenvs/robotDemo-dwdcgdaG/bin/celery worker -A resources.tasks.celery

[program:robotDemo_CeleryBeat]
command=/root/.local/share/virtualenvs/robotDemo-dwdcgdaG/bin/celery beat -A resources.tasks.celery --pidfile /var/run/celerybeat.pid -s /xxx/robotDemo/runtime/celerybeat-schedule
</code>

Of these,

celery worker -A resources.tasks.celery

and

celery beat -A resources.tasks.celery

are what created the other two processes.

This was later confirmed by:

/celery-beat-robotDemo_CeleryBeat-stderr.log

<code>[20180830 01:28:35 INFO 26049 MainProcess 139969090553664 MainThread FlaskLogSingleton.py:54 <module>] LoggerSingleton inited, logSingleton=<common.FlaskLogSingleton.LoggerSingleton object at 0x7f4d0add4080> </code>

and:

celery-worker-robotDemo_CeleryWorker-stderr.log

<code>[20180830 01:28:35 INFO 26052 MainProcess 140308360062784 MainThread FlaskLogSingleton.py:54 <module>] LoggerSingleton inited, logSingleton=<common.FlaskLogSingleton.LoggerSingleton object at 0x7f9c08e71048> </code>

This confirms that

26049 and 26052 are the other 2 processes.

So this is what I was looking for: the Celery worker and beat created the 2 extra processes.
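Matching PIDs across separate stderr files works, but the log lines themselves can say which program they came from. A sketch of a hypothetical `CmdlineFilter` (not part of the project or of any library) that tags each record with the program name and first positional argument from `sys.argv`, so a line reads `celery worker`, `celery beat` or `gunicorn` next to its PID. It is attached to the handler rather than the logger, because logger-level filters are not applied to records propagated up from child loggers.

```python
import logging
import os
import sys


class CmdlineFilter(logging.Filter):
    """Hypothetical helper: tag every record with the program name plus its
    first positional argument (e.g. 'celery worker', 'celery beat'), taken
    from sys.argv, so lines from different processes are easy to tell apart."""

    def __init__(self, argv=None):
        super().__init__()
        argv = sys.argv if argv is None else argv
        prog = os.path.basename(argv[0]) if argv else "?"
        # Only treat argv[1] as a subcommand if it is not an option like "-c".
        sub = argv[1] if len(argv) > 1 and not argv[1].startswith("-") else ""
        self.cmd = f"{prog} {sub}".strip()

    def filter(self, record):
        record.cmdline = self.cmd
        return True  # annotate only; never drop a record


if __name__ == "__main__":
    handler = logging.StreamHandler()
    handler.addFilter(CmdlineFilter())
    handler.setFormatter(logging.Formatter(
        "[%(asctime)s %(process)d %(cmdline)s %(filename)s:%(lineno)d] %(message)s"))
    root = logging.getLogger()
    root.addHandler(handler)
    root.setLevel(logging.INFO)
    logging.getLogger("resources.tasks").info("inited singleton")
```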

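So the problem to solve now is:

given that Celery creates 2 extra processes, how can the singleton in the Flask app (served by gunicorn with a single gevent worker) still work correctly?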

singleton not work for celery multiple process

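One idea that might help by reducing the number of processes,

based on what I found earlier:

http://docs.celeryproject.org/en/latest/reference/celery.bin.worker.html#cmdoption-celery-worker-b

the Celery worker can also run beat embedded in it (the -B option)

-> so there would be no need to run a separate celery beat process?

-> so a single process would handle both the Celery worker and beat?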

But I also just noticed:

http://docs.celeryproject.org/en/latest/reference/celery.bin.celery.html

I had assumed the earlier commands:

<code>bin/celery worker -A resources.tasks.celery
celery beat -A resources.tasks.celery --pidfile /var/run/celerybeat.pid -s /root/naturling_20180101/web/server/robotDemo/runtime/celerybeat-schedule </code>

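were using options of the top-level celery command documented there, but it turns out

it has no such options. Instead,

the subcommand name passed on the command line selects the command class:

worker maps to: u'worker': <class 'celery.bin.worker.worker'>

beat maps to: u'beat': <class 'celery.bin.beat.beat'>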
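A simplified sketch of that name-to-class dispatch, using hypothetical stand-in classes rather than Celery's real implementation. It only illustrates why `worker` and `beat` are separate commands (and, under supervisor, separate processes), not how Celery actually parses its options.

```python
class WorkerCommand:
    """Hypothetical stand-in for celery.bin.worker.worker."""

    def run(self, args):
        return f"worker {args}"


class BeatCommand:
    """Hypothetical stand-in for celery.bin.beat.beat."""

    def run(self, args):
        return f"beat {args}"


# Subcommand name -> command class, like the mapping shown above.
COMMANDS = {"worker": WorkerCommand, "beat": BeatCommand}


def dispatch(argv):
    """Select a command class by its name (argv[0]) and run it with the rest."""
    name, rest = argv[0], argv[1:]
    try:
        cls = COMMANDS[name]
    except KeyError:
        raise SystemExit(f"unknown command: {name}") from None
    return cls().run(rest)


if __name__ == "__main__":
    print(dispatch(["beat", "-A", "resources.tasks.celery"]))
```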

However, this approach is not suitable, because:

http://docs.celeryproject.org/en/latest/reference/celery.bin.worker.html#cmdoption-celery-worker-b

“Note

-B is meant to be used for development purposes. For production environment, you need to start celery beat separately.”

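In other words, -B is only meant for development and testing; in production, celery beat should run as a separate process, which is exactly what is already done here.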

singleton not work for celery multiple process

celery multiple process cause singleton not work

Using a Singleton in a Celery worker – Google Groups

Signals — Celery 4.2.0 documentation

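In theory, this could be done with Celery signals: put the shared data in a specific task and have the other code call it?

But I'm not going down that route for now; it's too much hassle.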

Celery: Task Singleton? – Stack Overflow

python – Django & Celery use a singleton class instance attribute as a global – Stack Overflow

python – Celery Beat: Limit to single task instance at a time – Stack Overflow

My Experiences With A Long-Running Celery-Based Microprocess

So far, there seems to be no good way to get a singleton that works correctly across

the 2 extra Celery processes (worker and beat) and the Flask app's process.

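Please credit when reposting: 在路上 » [Unsolved] In Flask, gunicorn deployment plus a supervisor-managed Celery worker create multiple processes, which breaks the singleton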