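While working on:

[Record] Demonstrating a simple crawler: using Python to extract the Baidu hot list from the Baidu home page

I went on to try a Python crawler framework, PySpider, to crawl the Baidu hot list.

First, install it:

[Solved] Installing PySpider for Python 3 on Mac

Then start PySpider:

[Solved] Starting PySpider on Mac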

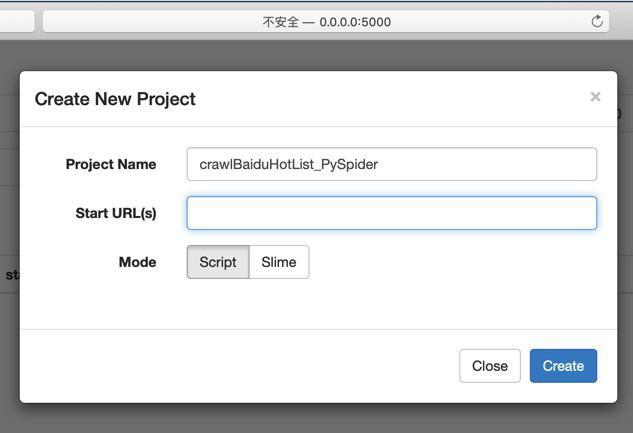

New project name: crawlBaiduHotList_PySpider

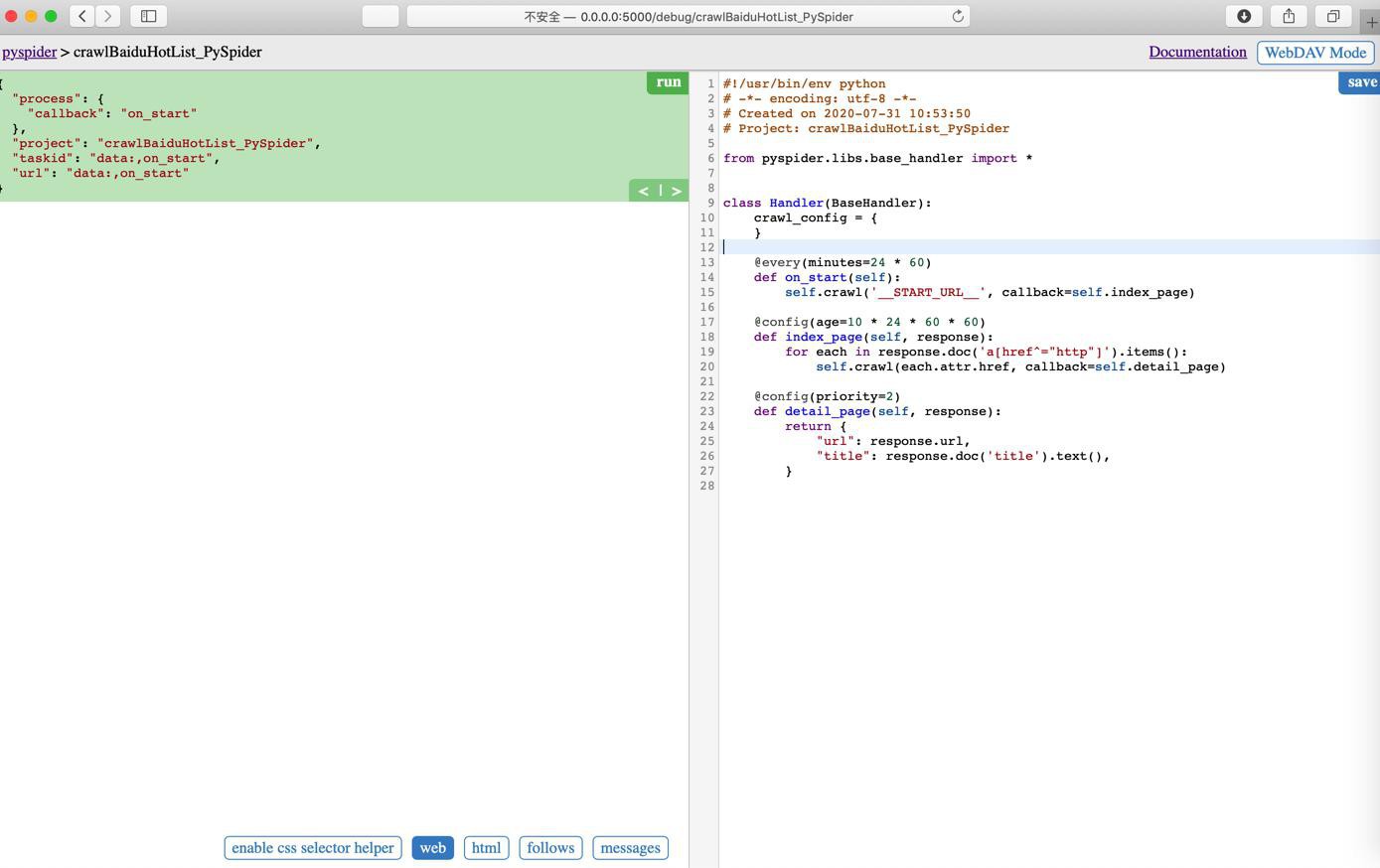

The default code:

    #!/usr/bin/env python
    # -*- encoding: utf-8 -*-
    # Created on 2020-07-31 10:53:50
    # Project: crawlBaiduHotList_PySpider

    from pyspider.libs.base_handler import *


    class Handler(BaseHandler):
        crawl_config = {
        }

        @every(minutes=24 * 60)
        def on_start(self):
            self.crawl('__START_URL__', callback=self.index_page)

        @config(age=10 * 24 * 60 * 60)
        def index_page(self, response):
            for each in response.doc('a[href^="http"]').items():
                self.crawl(each.attr.href, callback=self.detail_page)

        @config(priority=2)
        def detail_page(self, response):
            return {
                "url": response.url,
                "title": response.doc('title').text(),
            }

然后去调试和写代码实现爬虫

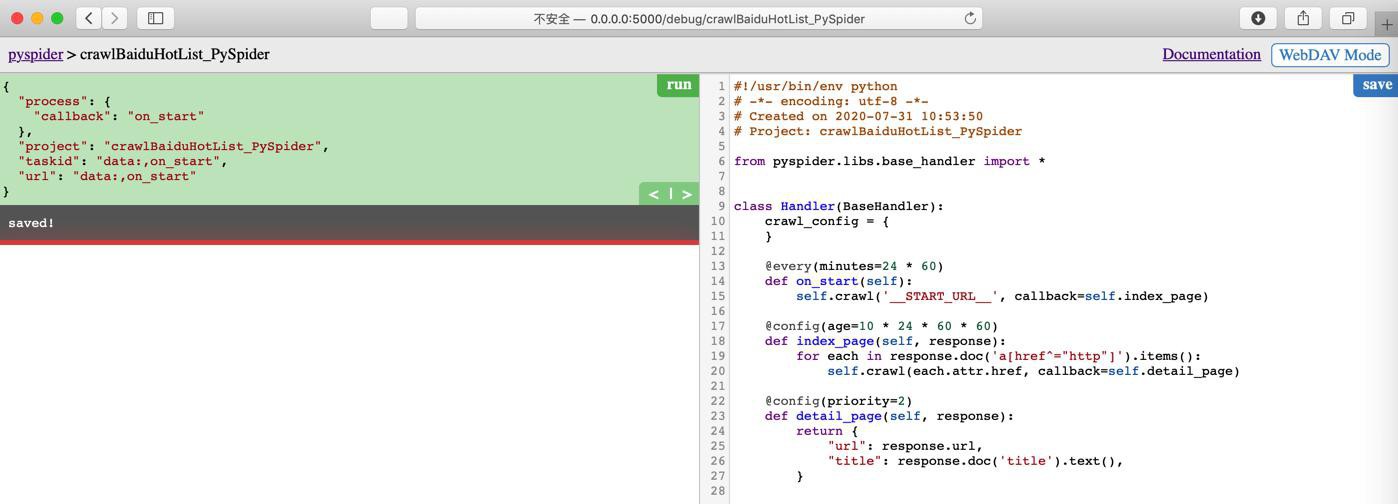

先点击Save去保存代码

再去修改代码。

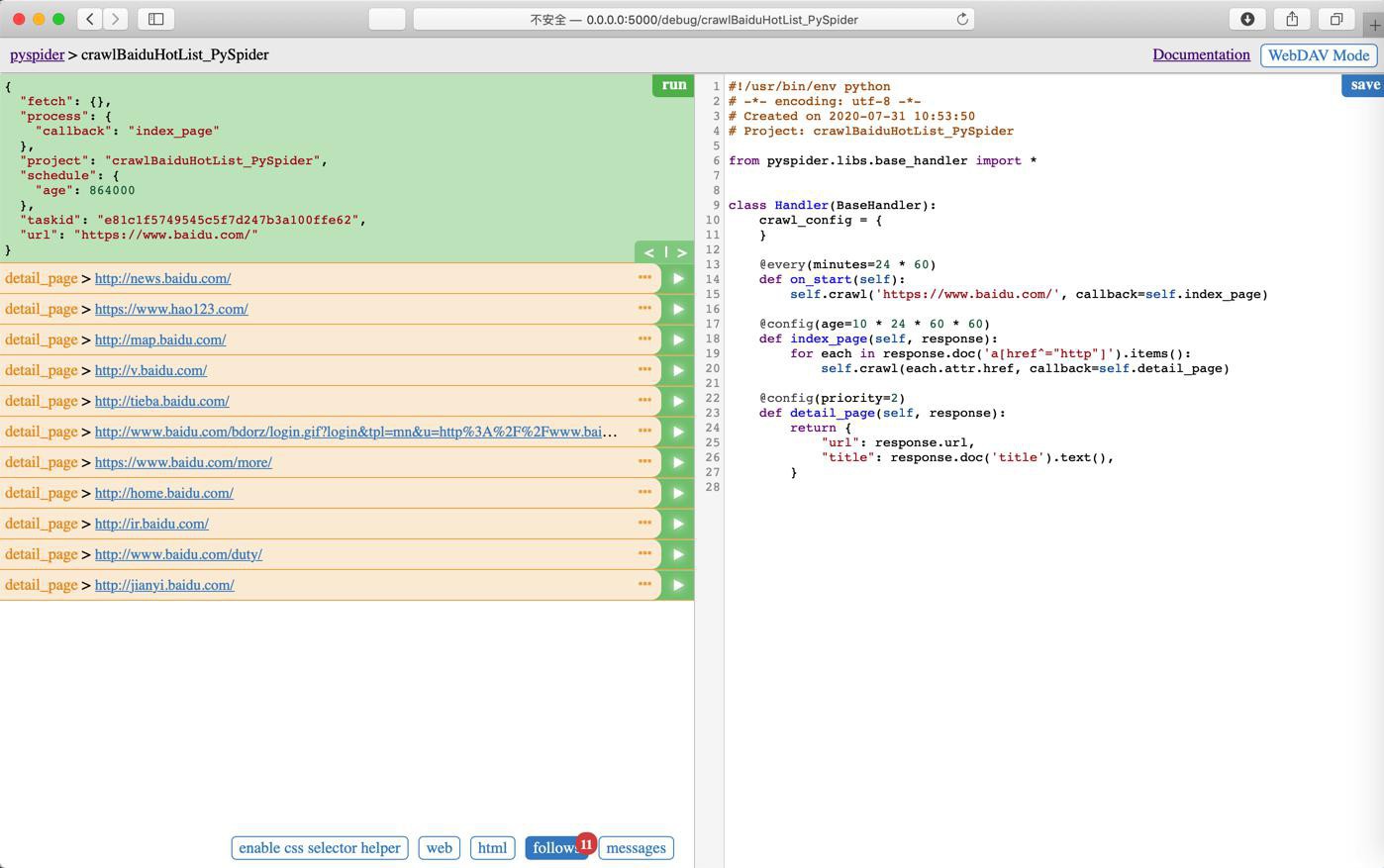

地址改为百度首页

点击Run,看到运行效果是:

不过此处不需要后续的代码

去掉,改为自己的逻辑

    #!/usr/bin/env python
    # -*- encoding: utf-8 -*-
    # Created on 2020-07-31 13:40:00
    # Project: crawlBaiduHotList_PySpider

    from pyspider.libs.base_handler import *


    class Handler(BaseHandler):
        crawl_config = {
        }

        # @every(minutes=24 * 60)
        def on_start(self):
            self.crawl('https://www.baidu.com/', callback=self.baiduHome)

        # @config(age=10 * 24 * 60 * 60)
        def baiduHome(self, response):
            # select every hot list title element on the home page
            titleItemList = response.doc('span[class="title-content-title"]').items()
            print("titleItemList=%s" % titleItemList)
            for eachItem in titleItemList:
                print("eachItem=%s" % eachItem)
                itemTitleStr = eachItem.text()
                print("itemTitleStr=%s" % itemTitleStr)
                # returning here ends the loop, so only one title is returned
                return {
                    "百度热榜标题": itemTitleStr
                }

With the code written, debug and run it. The result:

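[Solved] Result of crawling the Baidu hot list titles with PySpider

However, this code returns only a single result, so it needs to be changed to return one result per title, multiple times:

[Solved] How to return multiple results from a single page in PySpider and save them to the list on the built-in Results page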
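
The idea behind returning several results from one page can be sketched without PySpider itself. As far as I understand PySpider's result storage, results are de-duplicated per task, and a task is identified by its URL, so each result needs its own distinct URL. The helper name `build_result_messages` and the sample titles below are hypothetical and used only for illustration; the sketch builds the (url, result) pairs that would then be passed to `self.send_message(self.project_name, result, url=unique_url)`.

```python
def build_result_messages(page_url, titles):
    """Pair each title with a unique pseudo-URL (page URL plus '#index').

    Hypothetical helper: it only prepares the data, it does not call PySpider.
    """
    messages = []
    for index, title in enumerate(titles):
        # the '#index' fragment makes every URL distinct for the same page
        unique_url = "%s#%d" % (page_url, index)
        result = {"百度热榜标题": title}
        messages.append((unique_url, result))
    return messages


# Hypothetical sample titles, for illustration only
sample_titles = ["title A", "title B", "title C"]
for unique_url, result in build_result_messages("https://www.baidu.com/", sample_titles):
    print(unique_url, result)
```

Inside a handler, each pair would be sent with `send_message`, and an `on_message` method that returns the message would store it as one row on the Results page.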

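[Summary]

In the end, PySpider was used to:

- Fetch the HTML source of the Baidu home page

- Parse it and extract the required content

- Return the content, that is, export the results

  - into the built-in Results page

  - which supports downloading the results as a CSV file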
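
The parse and export steps above can be sketched outside PySpider using only the standard library. This is a minimal sketch under stated assumptions: it uses `html.parser` instead of the PyQuery selector behind `response.doc`, the names `TitleSpanParser`, `extract_titles`, `titles_to_csv` and `SAMPLE_HTML` are all hypothetical, and the CSV that PySpider's Results page produces may be laid out differently.

```python
import csv
import io
from html.parser import HTMLParser


class TitleSpanParser(HTMLParser):
    """Collect the text of every <span class="title-content-title">.

    Simplification: nested <span> elements inside a title are not handled.
    """

    def __init__(self):
        super().__init__()
        self.titles = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "span" and dict(attrs).get("class") == "title-content-title":
            self._in_title = True
            self.titles.append("")

    def handle_endtag(self, tag):
        if tag == "span":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.titles[-1] += data


def extract_titles(html):
    """Return the stripped text of all matching title spans."""
    parser = TitleSpanParser()
    parser.feed(html)
    return [title.strip() for title in parser.titles]


def titles_to_csv(titles):
    """Serialize the titles as CSV text with a single header column."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["百度热榜标题"])
    for title in titles:
        writer.writerow([title])
    return buffer.getvalue()


# Hypothetical sample markup, not the real Baidu home page
SAMPLE_HTML = """
<ul>
  <li><span class="title-content-title">first topic</span></li>
  <li><span class="title-content-title">second topic</span></li>
</ul>
"""
titles = extract_titles(SAMPLE_HTML)
print(titles_to_csv(titles))
```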

Complete code:

    #!/usr/bin/env python
    # -*- encoding: utf-8 -*-
    # Created on 2020-07-31 15:01:00
    # Project: crawlBaiduHotList_PySpider_1501

    from pyspider.libs.base_handler import *
    from pyspider.database import connect_database


    class Handler(BaseHandler):
        crawl_config = {
        }

        # @every(minutes=24 * 60)
        def on_start(self):
            # send a desktop Chrome User-Agent with the request
            UserAgent_Chrome_Mac = "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/84.0.4147.89 Safari/537.36"
            curHeaderDict = {
                "User-Agent": UserAgent_Chrome_Mac,
            }
            self.crawl('https://www.baidu.com/', callback=self.baiduHome, headers=curHeaderDict)

        # @config(age=10 * 24 * 60 * 60)
        def baiduHome(self, response):
            # for eachItem in response.doc('span[class="title-content-title"]').items():
            titleItemGenerator = response.doc('span[class="title-content-title"]').items()
            titleItemList = list(titleItemGenerator)
            print("titleItemList=%s" % titleItemList)
            # for eachItem in titleItemList:
            for curIdx, eachItem in enumerate(titleItemList):
                print("[%d] eachItem=%s" % (curIdx, eachItem))
                itemTitleStr = eachItem.text()
                print("itemTitleStr=%s" % itemTitleStr)
                # give each result its own URL by appending '#index'
                curUrl = "%s#%d" % (response.url, curIdx)
                print("curUrl=%s" % curUrl)
                curResult = {
                    # "url": response.url,
                    # "url": curUrl,
                    "百度热榜标题": itemTitleStr,
                }
                # return curResult
                # self.send_message(self.project_name, curResult, url=response.url)
                self.send_message(self.project_name, curResult, url=curUrl)

        def on_message(self, project, msg):
            # returning the message saves it as one result
            print("on_message: msg=", msg)
            return msg

Provided for reference.